Alpilotx pointed me toward a thread on the org discussing Austin’s work on the weather system. The thread turned into a bit of a he-said-she-said with regards to Outerra and whether it could some day be combined with X-Plane.

This blog post will be a discussion of various general approaches to scenery and the trade-offs we have to consider, e.g. plausibility and realism, procedural vs. algorithmic and data driven design. But first, a brief note on Outerra. As I have said before, we are already aware of Outerra, so there is no need to email us. The bottom line is that we have a set of mostly done features for X-Plane 10, our goal is to finish X-Plane 10, and we are not even spending one brain cell considering putting a new rendering engine into X-Plane while we are trying to get 10.0 done.

Defining Some Terms

One of the problems with comparing scenery system approaches is that a real productized approach to scenery rarely fits into a perfect bucket or matches a single theoretical technique. So here are some approximate terms, designed to generally describe an approach. They’re not going to be perfect fits, and even the definitions will fluctuate in different contexts and forums.

- We can say scenery is plausible when it looks like it might exist somewhere in the world. Plausible means that roads don’t go straight up over a cliff, trees don’t grow in the ocean, etc. In other words, plausible scenery is scenery where absurd things don’t happen. Plausible scenery is great when you don’t know what an area should look like. A lack of plausibility is often a bug.

- We can say scenery is realistic when it correlates closely with what is really present at a given location on the Earth. So if there really is a lake behind my house, realistic scenery has that lake. Plausible scenery might have a lake, a forest, or something else believable for where I live (the Northeastern United States). A giant sandy desert would not be plausible for my location.

- We can say scenery is procedural if the detail in the scenery comes from some kind of algorithm that produces results. For example, a fractal coastline is procedural.

- We can say scenery is data driven when the detail comes from some source of external input data. Our mountains are currently data driven – that is, the mountain shape basically comes directly from the DEMs we use.

- We can say scenery is artist driven if the look of the scenery comes from art assets created by an art team.

- We can say scenery is algorithm driven if part of its look comes from the transformational process that converts data from one form to another.

(I’m sort of drawing a line in the sand here with procedural vs. algorithmic, but what I’m trying to contrast is a program that generates ‘information’ out of thin air vs. a program that creates information out of other information. For example, in X-Plane 9, European capillary roads were procedural. We had no real data, so I wrote an algorithm that made them up in a manner that was consistent with underlying terrain. In version 10, these roads will be algorithmic; we take OSM data and then do some processing to make it suitable for X-Plane. This is definitely a line in the sand kind of definition.)

So Are We Plausible or Realistic?

So the first question is: is the goal of X-Plane global scenery plausibility or realism? The answer is: a bit of both. Austin’s posts on the subject virtually always bring up plausibility. The reason for this is simple: he is not too worried about the amount of realism we’ve put into the scenery, but he is not happy with the bugs. He wants the bugs gone. So every time he and I speak, he says “and make sure it’s plausible!”

But we’re not going to remove realism just to fix plausibility bugs. I expect that the next global scenery render will be at least as realistic as the last – that is, we’re going to use better data and we’re not going to make up data where we had real information before.

There are limits to realism. We don’t expect the global scenery to ever be as realistic as a custom scenery package for a small area. But realism does matter. Part of the joy of flying in a flight simulator is seeing the real world. Where we can have more realistic global scenery, we consider it to be a win, and we are always looking to be more realistic than the last render.

Plausibility for the version 10 render is going to take two forms:

- Bug fixes. Any time something screwy happens, it’s not plausible. Sometimes these are code bugs that must be fixed, and sometimes they are data conflicts. For example, the water data says “water” but the elevation data says “hill”. Combine them and you get water going up a hill. We have to write code to resolve this, somehow.

- We are reworking the way cities are rendered, because even at their best, the old approach, procedural buildings with algorithmic roads over land class photos, did not look plausible, even at its very highest setting. So this is a feature request to fix a plausibility problem.

Algorithmic or Procedural

I’ve discussed this before (and forgotten about the post). But to expand the discussion, we need to consider not only algorithmic and procedural data processing, but whether we are driven by procedural generation, input data, assets created by artists, or some combination. (In practice, all systems require a mix of data, art assets, and procedures and algorithms; it’s a question of the blend.)

I’ve been working on global scenery for a few years now, and over time I’ve come to appreciate the importance of artist input (via art assets) into any scenery process. Simply put, if you want scenery to look good, you need to make it reasonably straightforward for people who are good at making pretty pictures to control the look of your visual results. A few years ago I viewed the scenery process as strictly a question of data conversion and visualization, but now I see it as finding a way to merge art assets and data into a cogent final product, with the art assets being used in a way that the artists can control. In practice, this often means making sure that the art assets come in a format that artists are comfortable with or can learn without too much pain.

As I said in the previous post, our approach is becoming more algorithmic and less procedural as higher quality source data becomes available. (For example, we don’t have to generate European roads when we can import and reprocess them.) But our approach over time has always been heavily artist driven. By this I mean: our input data is algorithmically processed into a final form that makes sense only in the context of art assets, and we have a pretty good idea of what those art assets will look like when we design the algorithms. To use roads as an example again, our task with OSM is to convert OSM road data into a road network that will visualize nicely with road art assets created by an artist.

Procedural Compression

One way to view procedural scenery is “creating lots of information from little or no information”. But another way to think of it is as a compression technology. As was correctly pointed out on the org forums, you use less storage specifying the overall location of a forest than you do specifying every tree individually. The compressed form (store the forest location) can be equally plausible. It will be less realistic if the original tree locations were based on real world data, but it will be equally (unrealistic) if the original tree locations were procedurally generated. Put another way, pushing procedural processes out of the scenery generation process and into the flight simulator makes DSFs smaller.
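As a rough illustration of the compression idea (this is a hypothetical sketch, not how X-Plane or the DSF format actually stores forests), a forest can be described by a bounding box, a tree count, and a seed, and the individual trees regenerated at load time. The function name and the rectangular forest shape are assumptions made for the example.

```python
import random

def scatter_trees(min_x, min_y, max_x, max_y, count, seed):
    """Regenerate tree positions for a rectangular forest from six numbers.

    Hypothetical helper: the seed makes the result repeatable, so the
    stored description can stay tiny while every load yields the same trees.
    """
    rng = random.Random(seed)  # private generator: same seed, same trees
    return [(rng.uniform(min_x, max_x), rng.uniform(min_y, max_y))
            for _ in range(count)]

# The "compressed" forest: six numbers instead of 1000 coordinate pairs.
forest = (0.0, 0.0, 500.0, 300.0, 1000, 42)
trees = scatter_trees(*forest)
```

The trade-off is the one described above: the file shrinks, and the expansion work moves to load time.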

When I first started working on X-Plane 8 DSF scenery, not only was DVD size a factor, but so was load time; we had one core and it wasn’t a very fast core. Anything we could do to make loading faster, we did. Thus we pushed a lot of work into the scenery generation process, including procedural processes, to keep load time down.

Times have changed; we now have dual core machines as a baseline, and often quite a few more cores. Thus over time we are starting to move procedural processes back into the simulator, trading load time (which runs on multiple cores) for generation time and file size. So perhaps a more accurate statement would be: our scenery generation process is becoming more algorithmic and less procedural, and X-Plane itself is becoming more procedural. This is driven both by more input data (which must be processed up front) and more compute power on the host (which lets us shrink file size, and thus use DVD space for other things).

X-Plane 10

Here’s how this plays out in practice in version 10:

- Some (but not all) of the building placement work has been moved into X-Plane; a bit of expensive precomputation is still done at DSF generation time.

- Some (but not all) of road processing has been moved into X-Plane; a lot is still done at DSF generation time.

- Where possible, we are moving from a multi-layered approach to terrain to a pixel-shader-based approach to terrain. This cuts down overdraw and uses the GPU more efficiently. (The simplest example: in X-Plane 8 and 9, cliffs have separate terrains from hills. In X-Plane 10, a single terrain sits on both the cliff and the hill and changes its appearance based on the actual slope; this texture change is computed by the GPU.)

In other words, X-Plane 10 is making the logical evolution to better balance the computing resources we have to improve plausibility and realism.

At this point I can say with 99% confidence that X-Plane 10 will feature bezier curved roads. In X-Plane 9, a road is a line segment; you can simulate curved roads by using a lot of line segments, but the global scenery roads are pretty chunky.

X-Plane 10 allows for a road to be a bezier curve, allowing the specification of smooth curves with a small amount of data. This sets us up to trade off visual quality and performance using a rendering setting.
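To make the quality/performance trade-off concrete, here is a minimal sketch of turning one curve into line segments, where the segment count stands in for a rendering setting. It assumes a cubic bezier with four control points; the actual version 10 curve encoding is not described here, and the function names are made up for the example.

```python
def cubic_bezier(p0, p1, p2, p3, t):
    """Evaluate a cubic bezier at parameter t in [0, 1] (2-d points)."""
    u = 1.0 - t
    x = u**3 * p0[0] + 3 * u * u * t * p1[0] + 3 * u * t * t * p2[0] + t**3 * p3[0]
    y = u**3 * p0[1] + 3 * u * u * t * p1[1] + 3 * u * t * t * p2[1] + t**3 * p3[1]
    return (x, y)

def tessellate(p0, p1, p2, p3, segments):
    """Approximate the curve with `segments` straight pieces.

    `segments` plays the role of a hypothetical quality setting:
    more pieces look smoother but cost more vertices.
    """
    return [cubic_bezier(p0, p1, p2, p3, i / segments)
            for i in range(segments + 1)]

curve = ((0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0))
chunky = tessellate(*curve, segments=4)    # 5 vertices
smooth = tessellate(*curve, segments=32)   # 33 vertices, same four stored points
```

Either way the stored data is the same four control points; only the amount of geometry generated from them changes.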

A few notes for authors:

- Like all of the new v10 road features (and pretty much all of the new v10 scenery features), you don’t have to use bezier curves in your roads. They are there as an option if you want them.

- X-Plane 10 will not make curves for you; road data that is defined as line segments in the DSF will be rendered as line segments. (This follows the principle that DSFs contain pre-processed scenery data, and the sim shows DSFs exactly as they are written.)

Pay No Attention to the Documentation

The DSF specification alludes to bezier curved roads; this “old way” of encoding curves was never supported in the sim – all versions of X-Plane ignore this data. The “old way” was how we thought we might do curves some day.

The version 10 curve encoding is different; the “old way” will continue to be ignored in version 10. So: do not use the DSF spec to try to make curved roads now. I will post detailed documentation on curved roads once version 10 is available to authors.

I have been stingy with pictures of next-gen global scenery for one reason: it’s really hard to get a nice shot of the global scenery that doesn’t show unfinished features. With something like global lighting I can zoom in and show just the new trick, but with global scenery, I can’t take a picture of a new house without showing a city block that looks funky due to a bug and a road that isn’t finished. Posting a working shot of the global scenery where some sub-systems have bugs and artifacts would just freak everyone out.

I figure if it’s obvious that the shot isn’t a production shot, I can get away with posting it though.

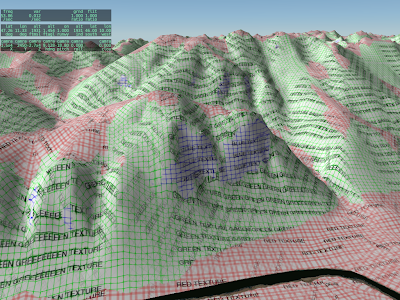

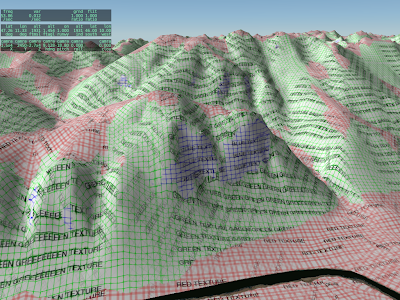

A lot of the time when I work on the rendering engine, it is with test textures like this. Our art team does their best to hide the seams between different art assets, so that the scenery looks like one continuous world. The problem for me is that the better they do, the harder it is for me to tell if the underlying shaders are doing what they should do.

So alpilotx sent this test: it’s all of the Innsbruck area painted with a test texture. What’s new and interesting here is that the flat, hill, and cliff areas are all shaded by a single shader that selects between multiple textures (and rotates the textures) based on the underlying mesh.

We are adding the cliff shader to version 10 for a few reasons:

- Often we can get better cliff and hill definition by processing in the shader than by painting different triangles with different textures; our ability to control the transitions using different .ter files is limited.

- Using one slope-sensitive shader saves over-draw and triangle count, which makes the DSFs faster and smaller.

- Some day we may have the GPU distorting mountains on the fly to make them more mountainous. If we do, we need the GPU to also apply the correct textures; if the cliff areas are precomputed then they won’t respond to GPU distortion.
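The slope-sensitive idea can be sketched on the CPU as follows. This is only an illustration of choosing between flat, hill, and cliff textures from the mesh normal; the threshold angles, the smoothstep blend, and the function names are assumptions, not the real shader.

```python
import math

def slope_degrees(normal):
    """Angle between the surface normal and straight up (z axis), in degrees."""
    nx, ny, nz = normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    cos_angle = max(-1.0, min(1.0, nz / length))
    return math.degrees(math.acos(cos_angle))

def smoothstep(edge0, edge1, x):
    """Smooth 0..1 ramp between two edges, as found in shading languages."""
    t = max(0.0, min(1.0, (x - edge0) / (edge1 - edge0)))
    return t * t * (3.0 - 2.0 * t)

def terrain_weights(normal, hill_start=10.0, hill_full=25.0,
                    cliff_start=40.0, cliff_full=55.0):
    """Blend weights for three textures; the angle thresholds are made up."""
    slope = slope_degrees(normal)
    cliff = smoothstep(cliff_start, cliff_full, slope)
    hill = smoothstep(hill_start, hill_full, slope) * (1.0 - cliff)
    flat = 1.0 - hill - cliff
    return {"flat": flat, "hill": hill, "cliff": cliff}

print(terrain_weights((0.0, 0.0, 1.0)))  # level ground: all "flat"
print(terrain_weights((1.0, 0.0, 0.0)))  # vertical wall: all "cliff"
```

Because the weights depend only on the normal, they would follow the mesh automatically if the mesh were ever distorted, which is the point of the last bullet above.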

This post is a bit of an experiment…we’ll see how it goes. When we cut the global scenery, we do a number of validations: we manually inspect some of the DSF tiles, we examine the Earth orbit textures (which are derived from the DSFs) as a quick way to look roughly at all of the tiles, we have a number of internal consistency checks in the generator, and we can compare our output to some of the input data (e.g. did we lose all of our water) to look for gross bugs. But we don’t have enough resources to manually examine all 18,000+ tiles in detail inside X-Plane.

So: if you have found a defect in a global scenery tile in version 9, please list the tile in the comments section of this post. I will try to give these “previously broken” tiles priority in manual inspection.

Note that the bugs we can expect in version 10 will be very different than in version 9 because we’ve really changed the global scenery generation process in nearly every important way. The underlying algorithms are heavily rewritten and data sources are very different. The goal here is to simply find areas that might have a higher probability of weirdness.

Let me try to be clear about what constitutes a broken tile and what does not. Please read this definition six or seven times before you post a comment.

- Do not report bugs of inaccurate data. If your favorite road is missing, or the coastline is in the wrong place, don’t report that here. That is not a defect in the rendering process; rather it is a limitation in the source data. I am not trying to collect a list of data inadequacies. We have already selected the new data for the new render. “Stuff is in the wrong place” is not a bug for this list!

- Really weird patterns are of interest. For example: I have seen some reports of very long thin bars of land going perfectly north along the edge of the tile, or a comb of mountain and valley, again perfectly vertical. These are bugs in the production process and I do want to know about them!

- Do not report errors in the placement of the 3-d layers (trees, roads, etc.). Since the 3-d layer is being completely rebuilt for version 10, none of these defects will be present in version 10. (They’ll be replaced with a totally new set of weird behaviors!)

- If an airport is too bumpy to use (with “flatten” turned off in the sim), report this only if the terrain around the airport is grass. Basically the grass means that we tried to “fix” the airport in the v9 render and failed. If there is no grass (the airport is over forest or city) and it is bumpy, do not report it – that means it was added to apt.dat later.

- Do not report mismatches between the airport shape and the grassy patch; this is just the apt.dat file being updated.

If you have something to report (basically incorrect flattening of grassy airport areas and really weird coastline/terrain bugs that are clearly programming errors) please include three pieces of information:

- The DSF tile, e.g. +42-072

- The nearest ICAO of an airport near the bug (e.g. KBED)

- A very short description of what went wrong (e.g. “tall thin vertical lakes running through the entire DSF”).
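If you know the latitude and longitude of the problem but not the tile name, a small helper like the one below can work it out. It assumes tiles are named after their south-west corner in whole degrees, which matches the +42-072 example; the function name is made up, and the coordinates used for KBED are approximate.

```python
import math

def dsf_tile_name(lat, lon):
    """Name of the 1x1 degree tile containing a point.

    Assumes the name is the tile's south-west corner: signed two-digit
    latitude followed by signed three-digit longitude.
    """
    return "%+03d%+04d" % (math.floor(lat), math.floor(lon))

# Roughly where KBED is (about 42.47 N, 71.29 W).
print(dsf_tile_name(42.47, -71.29))  # +42-072
```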

Please only post defects of the above nature in the comments section of this blog post; to keep things clean I am going to nuke any off-topic comments on this post.

EDIT: see the first Squawk, Arista’s report on LOWS. This is a perfect report: it includes the DSF tile, the ICAOs, a brief but clear description, and it is a bug in how we process the data, not the data itself.

A quick thought on procedural vs. algorithmic scenery: there was some discussion on the X-Plane dev list about procedural terrain; the Outerra screen shots have stirred up interest.

There is a fundamental difference between what you see with Outerra and what we do with our global scenery:

- Typically procedural scenery is based on the idea of ‘amplifying data’ – that is, making a little bit of data look more interesting by applying a recursive algorithm to “generate” detail.

- Algorithmic scenery involves taking a large amount of unique input data and translating it; the detail comes from not losing information in the source data.

The key difference is whether the resulting scenery comes from a process that “creates” information through a ‘procedure’ or “translates” information.

Our scenery process does a bit of both, but we are more in the algorithmic camp than procedural camp; the global scenery is produced from hundreds of GB of input data, and we are constantly looking for better input data to create more interesting and accurate output scenery.

In fact, I would say that we are becoming more algorithmic and less procedural. In version 9, the urban roads in non-US cities are “procedural” – an algorithm generates them from the terrain data and some random noise. For version 10, we are importing OSM.

One thing I have noticed in the work on version 10 global scenery is that we’ve finally crossed a line. With version 9, the question was ‘what is the best data we can get’. With version 10, the question is ‘how much information can we keep’; we’ve reached a point where the resolution of our input data is so much higher than what can go on DVD, that it’s a question of filtering down, not synthesizing up the resolution.

These are two pictures of “tilings” of OpenStreetMap for use in global scenery. I downloaded a new OSM planet extract about a month ago; in the 11 months since I last grabbed it, the data size has grown 56%! The new, larger file required some changes to my extracting code. After much debugging, I was able to see this in QGIS:

The first picture is 1×1 tiles, which are derived from the second picture (10x10s). You’ll see some “ragged” edges. This is because the cutting scheme leaves whole roads of interest in one piece even outside the tiling bounds. Later, more sophisticated code crops the road when the actual DSF is built.
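The “ragged edge” behavior can be sketched like this. It is a simplified stand-in for the real cutting code, not a copy of it: each road is a list of (lon, lat) points, and a road is copied whole into every tile that one of its points falls in. A long segment that crosses a tile without a vertex inside it would be missed by this version.

```python
import math
from collections import defaultdict

def tile_of(lon, lat, size=1):
    """South-west corner of the size x size degree tile holding a point."""
    return (math.floor(lon / size) * size, math.floor(lat / size) * size)

def bucket_roads(roads, size=1):
    """Copy each road, uncropped, into every tile one of its points touches."""
    tiles = defaultdict(list)
    for road in roads:
        touched = {tile_of(lon, lat, size) for lon, lat in road}
        for tile in touched:
            tiles[tile].append(road)  # whole road: this is the ragged edge
    return tiles

road = [(-71.9, 42.5), (-72.2, 42.6)]      # crosses the -72 meridian
coarse = bucket_roads([road], size=10)     # first pass: 10x10 tiles
fine = bucket_roads([road], size=1)        # second pass: 1x1 tiles
```

Here the one road lands, uncut, in both the (-72, 42) and (-73, 42) tiles; cropping to the exact tile boundary is left to a later step, as described above.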

The OSM processing tools are part of the open source scenery tools; I will get my changes checked in to source control over the next few days, although my code is only one of dozens of programs for bulk processing OSM.

The X-Plane version 8/9 default scenery uses raster land use data (that is, a low-res grid that categorizes the overall usage of a square area of land) as part of its input in generating the global scenery. When you use MeshTool, this raster data comes in the .xes file that you must download. So…why can’t you change it?

The short answer is: you could change it, but the results would be so unsatisfying that it’s probably not worth adding the feature.

The global scenery is using GLCC land use data – it’s a 1 km data set with about 100 types of land class based on the OGE2 spec.

Now here’s the thing: the data sucks.

That’s a little harsh, and I am sure the researchers tried hard to create the data set. But using the data set directly in a flight simulator is immensely problematic:

- With 1 km spatial resolution (and some alignment error) the data is not particularly precise in where it puts features.

- The categorizations are inaccurate. The data is derived from thermal imagery, and it is easily fooled by mixed-use land. For example, mixing suburban houses into trees will result in a new forest categorization, because of the heat from the houses.

- The data can produce crazy results: cities on top of mountains, water running up steep slopes, etc.

That’s where Sergio and I come in. During the development of the v8 and v9 global scenery, Sergio created a rule set and I created processing algorithms – combined together, this system picks a terrain type from several factors: climate, land use, but also slope, elevation, etc.

To give a trivial example, the placement of rock cliffs is based on the steepness of terrain, and overrides land use. So if we have a city on an 80 degree incline, our rule set says “you can’t have a city that slanted – put a rock face there instead.”
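In spirit, a rule set like this is an ordered list where the first matching rule wins. The toy version below has three rules instead of roughly 1800, and the terrain names, thresholds, and inputs are invented for illustration; it is not Sergio’s rule set.

```python
def pick_terrain(slope_deg, climate, landuse):
    """Return the terrain of the first rule that matches (toy rule set)."""
    rules = [
        # Steepness is checked first, so it overrides land use.
        (lambda s, c, l: s >= 60.0, "rock_cliff"),
        (lambda s, c, l: l == "urban", "city"),
        (lambda s, c, l: c == "temperate", "mixed_forest"),
    ]
    for matches, terrain in rules:
        if matches(slope_deg, climate, landuse):
            return terrain
    return "grass"  # fallback when nothing matches

print(pick_terrain(80.0, "temperate", "urban"))  # rock_cliff, not city
print(pick_terrain(5.0, "temperate", "urban"))   # city
```

Ordering is what lets slope override land use: the city rule is never consulted for an 80 degree incline.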

Sergio made something on the order of 1800 rules. (No one said he isn’t thorough!!) And when we were done, we realized that we barely use landuse.

In developing the rule set, Sergio looked for the parameters that would best predict the real look of the terrain. And what he found was that climate and slope are much better predictors of land use than the actual land use data. If you didn’t realize that we were ignoring the input data, well, that speaks to the quality of his rule set.

No One Is Listening

Now back to MeshTool. MeshTool uses the rule set Sergio developed to pick terrain when you have an area tagged as terrain_Natural. If you were to change the land use data, 80% of your land would ignore your markings because the ruleset is based on many other factors besides landuse. Simply put, no one would be listening.

(We could try some experiments with customizing the land use data; there is a very small number of land uses that are keyed into the rule set. My guess is that this would be a very indirect and thus frustrating way to work, e.g. “I said city goes here, why is it not there?”)

The Future

I am working with alpilotx – he is producing a next-gen land-use data set, and it’s an entirely different world from the raw GLCC that Sergio and I had a few years ago. Alpilotx’s data set is high res, extremely accurate, and carefully combined and processed from several modern, high quality sources.

This of course means the rules have to change, and that’s the challenge we are looking at now – how much do we trust the new landuse vs. some of the other indicators that proved to be reliable.

Someday MeshTool may use this new landuse data and a new ruleset that follows it. At that point it could make sense to allow MeshTool to accept raster landuse data replacements. But for now I think it would be an exercise in frustration.

The global scenery gets cut on “the renderfarm”, which is our name for the cluster of computers, each loaded with all of the input data for the global scenery. These computers chug for a few days to churn out the whole planet.

With the next global render I am trying an experiment: using one 8-core Mac Pro as the RenderFarm. We’ve never had more than 8 processors in the farm at a time; in the past to get 5 processors might have taken 3 machines. The appeal of a single machine is ease of setup; no data to sync between machines, no sorting out which machine did which tile and merging it all back.

Today I upgraded the Mac Pro’s memory (again) to 12 GB. I thought the logic of why I did this might be of interest to X-Plane users who are trying to figure out “should I have more memory”?

Basically there are three memory limits we care about:

- The virtual memory limit per process – generally 3 GB per process for a 32-bit application. If an application wants more memory than this, regardless of what you have, it is dead.

- The virtual memory limit for the whole machine. Since the machine virtual memory limit is a function of hard disk space, normal users will never care about this – we can have a huge amount of virtual memory.

- The physical memory actually used by the sum of all programs actually running. Once all programs need more physical memory than you have, they start using hard drive, and they get really, really, really slow.

In the case of the render farm, our processing program runs on one DSF tile at a time. But with 18,000+ tiles we can take advantage of more cores by processing 8 tiles (using 8 copies of the program running at once) on 8 cores.

This is where memory comes in. Before the upgrade my machine had 4 GB of memory, allowing each of the 8 tiles to use a little bit less than 512 MB of RAM before we ran out of physical memory and started paging. (The OS takes a little bit for itself.)

Normally this is all good — a typical run might only use 300 MB of RAM. But every now and then we hit New York City or Boston and the RAM use spikes out to 1500 MB or more.

This is okay if just one process hits New York, but what if a bunch of processes hit a big city at once? We blow past the physical RAM of the machine and every tile becomes slow at once. And because they are all slow at once, they take a very long time (hours) to clear this state.

(This state of thrash due to many processes is like 8 people trying to go through a doorway at once. If they would just take turns, they’d be through in a second. But by forcing 8 at once they get stuck. The operating system won’t pause a few of the high-memory programs to let the others complete.)

Since the RenderFarm has to run overnight unattended, I upgraded RAM to 12 GB, for 1500 MB per process before thrash. My hope is that for this investment, we’ll be able to run the processing through without a human to unjam it.
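The arithmetic behind those numbers is simple enough to write down. This sketch ignores the slice the OS keeps for itself unless you pass a reserve, and the function name is made up.

```python
def per_process_budget_mb(total_gb, processes, os_reserve_mb=0):
    """Physical RAM available to each process before paging starts."""
    return (total_gb * 1024 - os_reserve_mb) / processes

print(per_process_budget_mb(4, 8))    # 512.0  (before the upgrade)
print(per_process_budget_mb(12, 8))   # 1536.0 (after: roughly 1500 MB each)
```

With 12 GB, all 8 processes can hit a 1500 MB city at the same time and still fit in physical memory.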

What About X-Plane

So would a RAM upgrade for X-Plane help? Well we can apply what we know from above to figure it out. Generally, you need enough RAM that X-Plane + all other programs won’t run out of physical memory. Since X-Plane is 32-bit (and can only use 3 GB of RAM) you are likely to be fine with 4 GB when running X-Plane + the OS. Any more and X-Plane can’t take advantage.

The exception might be if you need to run X-Plane and another big program like PhotoShop at the same time. At that point you might want enough RAM for both to run at the same time.

I’ve been working with OpenStreetMap (OSM) data this week. The great thing about OSM (besides already containing a huge amount of road data) is that you can edit and correct data – that is, OSM manages the problem of “crowd sourcing” world map source data.

I get email from people all the time, saying “how can I help fix my local area of the global scenery”. With OSM, you can help, by improving OSM’s source data.

Here are two things that will matter in the quality of generated scenery:

- The oneway tag. Roads that are one-way need to have this tag, or the conversion to X-Plane might have an incorrect two-way road in place. If you don’t see the one-way arrows on the OSM map rendering then this tag might be missing.

- The layer tag. When roads cross, “layer” tells OSM which one is on top (and that they do not intersect). Similarly, if a highway is underground, it’s because it has a negative layer. If the layer tag is missing, complex intersections will probably render as junk.

In the US, a lot of OSM is built off an import of the TIGER census road map. Unfortunately TIGER in its previous released form does not contain one-way or layering information. So particularly for US cities, adding these tags will improve the road rendering a lot!
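To show why missing tags hurt, here is a hedged sketch of how a converter might read them. It is not the actual X-Plane import code: the function names are invented, a way’s tags are assumed to arrive as a plain dict of strings, and the defaults (two-way, layer 0) are what a converter has to fall back on when the tag is absent.

```python
def road_direction(tags):
    """Interpret the OSM `oneway` tag; a missing tag means two-way."""
    value = str(tags.get("oneway", "no")).strip().lower()
    if value in ("yes", "true", "1"):
        return "forward"
    if value == "-1":
        return "backward"  # one-way against the direction the way is drawn
    return "both"

def road_layer(tags):
    """Interpret the OSM `layer` tag; missing or malformed means ground level."""
    try:
        return int(tags.get("layer", 0))
    except ValueError:
        return 0

overpass = {"highway": "motorway", "oneway": "yes", "layer": "1"}
untagged = {"highway": "residential"}
print(road_direction(overpass), road_layer(overpass))  # forward 1
print(road_direction(untagged), road_layer(untagged))  # both 0
```

Two crossing roads that both fall back to layer 0 look like an ordinary intersection, which is how an untagged overpass ends up rendered as junk.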

A few days ago, the ASTER GDEM was released. ASTER GDEM is a new elevation set with even greater coverage than SRTM. Both SRTM and ASTER (I’ll drop the GDEM – in fact ASTER produces more than just elevation data, but the elevation data is what gets flight simmers excited right now) are space-based automated measurements of the earth’s height. But since ASTER is on a satellite (as opposed to an orbiting space shuttle) it can reach latitudes closer to the poles.

So what does this mean for scenery? What does it mean for the global scenery? A few thoughts:

- ASTER data is not yet very easy to get. You can sign up with the USGS distribution website but you’re limited to 100 tiles at a time, with some latency between when you ask and when you get an FTP site. Compare this to SRTM, which can be downloaded automatically in its entirety, or ordered on DVD. ASTER may reach this level of availability, but it’s not there yet.

- ASTER is, well, lumpy. (NASA says “research grade”, but you and I can say “lumpy”.) Jonathan de Ferranti describes ASTER and its limitations in quite some detail. Of particular note is that while the file resolution is 30m, the effective resolution of useful data will be less.

  SRTM has its defects, too, but ASTER is very new, so the GIS community hasn’t had a chance to produce “cleaned up” ASTER. And clean-up matters; it only takes one really nice big spike in a flat flood plain to make a “bug” in global scenery. I grabbed the ASTER DEMs for the Grand Canyon. Coverage was quite good, despite the steep terrain angle (steep terrain is problematic by design for SRTM) but there were still drop-out areas that were filled with SRTM3 DEMs, and the filled-in area was noticeable.

- By the numbers, ASTER is not as good as NED; I imagine that other country-specific national elevation datasets are also both more accurate and more precise than ASTER.

- The licensing terms are, well, unclear. The agreements I’ve seen imply a limited set of research uses for the data. The copyright terms are not well specified.

So at this point I think ASTER is a great new resource for custom scenery, where an author can grab an ASTER DEM in a reasonable amount of time, check it carefully, and thus have access to high quality data for remote parts of the Earth, particularly areas where locally grown data is not available or not high quality.

In the long term, ASTER is a huge addition to the set of data available because of its wide-scale coverage of remote areas, and because it can fill holes in SRTM. (ASTER and SRTM suffer from different causes for drop-outs, so it is imaginable that there won’t be a 1:1 correlation in drop-outs.)

But in the short term, I don’t think ASTER is a SRTM replacement for global scenery; void-filled SRTM is a mature product, reasonably free of weirdness (and sometimes useful data). ASTER is very new, and exciting, but not ready for use in global scenery.