To answer the most basic questions:

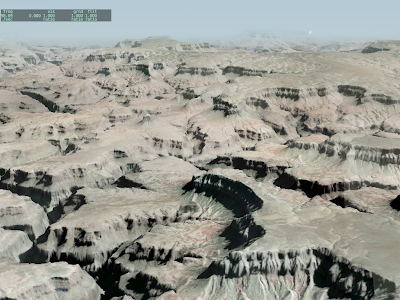

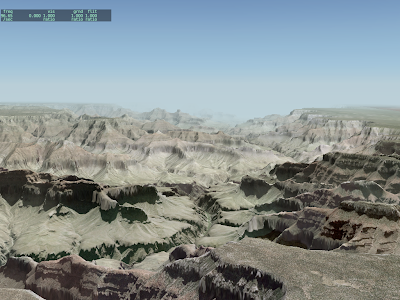

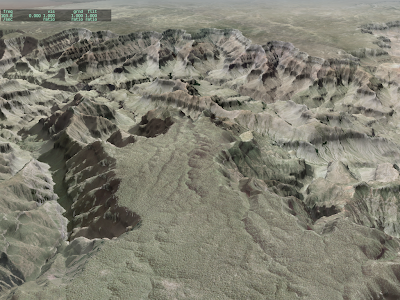

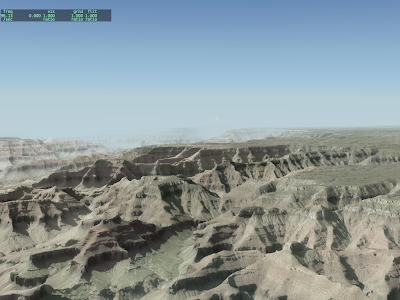

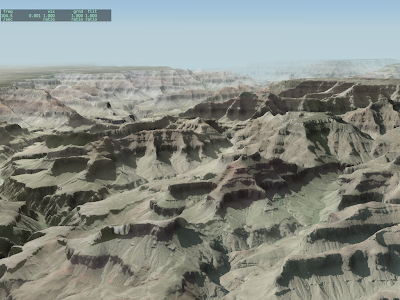

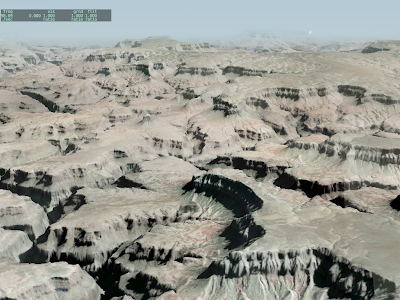

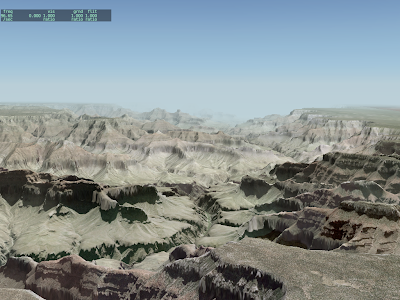

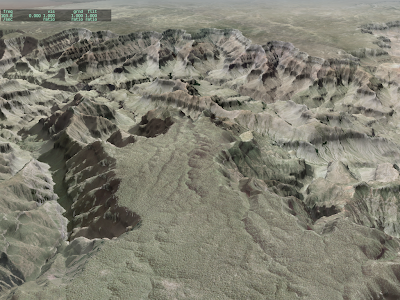

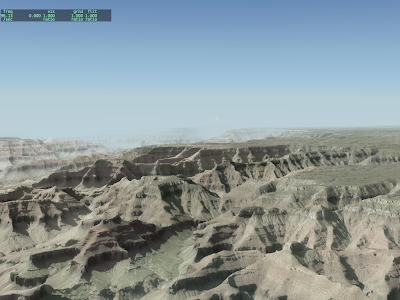

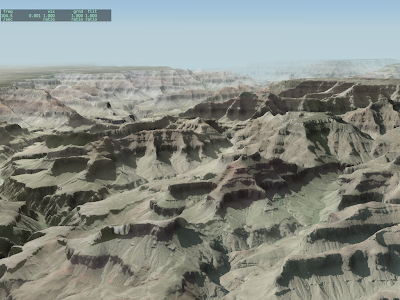

- This is a base mesh orthophoto scenery I made with MeshTool, as a test.

- The source DEM is 10m NED, the source imagery is 1mpp DOQQ, down-scaled to 3mpp. I gave MeshTool a point budget of 500,000 mesh points per DSF tile, and it used them all.

- This version of MeshTool (2.0 beta 2) should be out in the next 3 days.

- That’s X-Plane 930RC4. The framerate really is around 100 fps.

- There are no dynamic real-time shadows. Rather, the orthophotos have the shadows “baked” in because they’re photos.

- There are artifacts at the joins of the orthophotos because I spent no time fixing projection errors.

Clearly we need more than 25 nm visibility in some cases!

In the next X-Plane 930 beta (it should post today I think) the rendering settings have two new check-boxes: one to enable the “dynamic” airplane shadow and one to enable per-pixel lighting.

In the last week a number of users emailed me performance numbers via the fps test, and from what I can tell, 99% of performance problems can be attributed to these two new features chewing up resources in a way that 922 did not. When the features are both disabled, from what I can tell, the sim should be as fast or faster than 922.

The new beta will also limit dynamic shadows to your aircraft – beta 13 calculates a dynamic shadow for every aircraft, which is unacceptably slow when you have a lot of AI planes enabled.

I may still be able to improve the performance of the per-pixel-lighting shader, but fundamentally per-pixel lighting is going to be more expensive than per vertex. The average X-Plane scene might have 200,000 to 500,000 vertices. At absolute minimum resolution, no FSAA, you’re going to have over 700,000 pixels even if there is no “over-draw” – you could easily have 10x that fill rate with only a modest increase in overdraw, full-screen anti-aliasing and window size. Simply put, per-pixel lighting is more work.

Please bear in mind: without per-pixel lighting X-Plane’s pixel shader is extremely simple. If you have a “low-end” card this could give you the illusion of GPU power when there is really not much under the hood.

Examples of low-end cards: GeForce 7300, GeForce 8400, GeForce 9400, Radeon X300, Radeon X1300, Radeon HD2400. All of these cards are the younger brother of a fairly capable card, but with fewer pixel shader units/cores. If each unit is doing very little work, you don’t need that much pixel-filling power…but when we go to a “real” shader, the difference between a GeForce 8400 and 8800 becomes very, very apparent. Simply put, even with optimization the GeForce 7300 (for example) will never run a huge monitor with per pixel lighting and high FSAA.

In a previous post (in which I tried to argue that threading is a “how” and not a “what” when it comes to feature requests) a user made this comment:

That is, that I feel you are a bit too concerned about the fact that XP has to be possible to run on a 2001 year machine. This really halts the development although you could add options to turn this and that off.

I’d like to side-step around the details of cost-benefit analysis (e.g. do the sales from low-end systems pay for the development of a renderer with lower system requirements) but take a second to focus on three general issues:

- Is there a cost to developing a scalable renderer?

- How does the trend of hardware development affect scalability?

- How do marketing forces affect both of the above?

Scalability

Is there a cost to writing a renderer that can run on a wide range of hardware? Absolutely. Obviously we have to write more code to do that.

But there is an additional cost: there are some rendering engine design decisions that have to be made system-wide. It’s not practical to provide different scenery files for different hardware (since we are limited by distribution on DVD). In some cases we have to pick a non-ideal data layout (for the highest end hardware) to support everyone.

But: before you take up arms against your fellow X-Plane user who is holding you down with his GeForce 2 MX and P-III 800 MHz machine, bear in mind that the problem of picking a data format is a bit unavoidable. Even if we targeted the highest-end machines and told everyone else to jump in a lake, those decisions would appear to target rather quaint machines only a year into the version run. At some point we have to draw a line in the sand.

There is some light at the end of the tunnel when it comes to scalability: as computers become (on average) bigger and faster, we can start to defer at least a little bit of the work of scenery generation to while the sim is running. When we first designed the new scenery system (for X-Plane 8) most users did not have dual-core machines, so doing work on the scenery while the sim was running was very expensive. We preprocessed as much as possible. This isn’t as necessary any more.

So are high-end users limited by having one renderer that fits all sizes? Perhaps a little bit, but any design choice is only going to fit one hardware profile perfectly, and hardware is a moving target; today’s shiny new toy is tomorrow’s junk.

Hardware Growth

Every two years (to be very loose about things) the number of transistors we can pack on a chip doubles. This “transistor dividend” can be turned into more cores for a CPU, or more shading units (which are now really just cores) for a GPU.

And this gets to the heart of why I don’t think we can say “forget the low-end” any time soon. Imagine that we support 6 years of hardware with X-Plane, and the best hardware is 8 times as powerful as the low-end hardware. Fast-forward two years – we drop two years of hardware and two years of new ATI and NV graphics cards come out. What is the result?

Well, the newest hardware is still 8x as powerful as the old hardware, but the difference in the polygon budget between the two has now doubled! In other words, the gap in absolute performance is doubling every two years, driving the two ends of our hardware spectrum farther apart. (Absolute performance is what Sergio and I have to worry about when we design a new feature. How many triangles can we use is an absolute measurement.)
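To make that arithmetic concrete, here is a rough sketch in Python. It assumes performance doubles exactly every two years (a simplification) and measures it in arbitrary units; the function names and the six-year support window are only illustrative.

```python
def relative_power(year, doubling_period=2.0):
    """Hardware power in arbitrary units, assuming it doubles every `doubling_period` years."""
    return 2.0 ** (year / doubling_period)


def supported_range(newest_year, window_years=6):
    """Return (oldest, newest) power for a support window ending at `newest_year`."""
    oldest = relative_power(newest_year - window_years)
    newest = relative_power(newest_year)
    return oldest, newest


# Compare the supported range today (year 6) with the range two years later (year 8).
for newest_year in (6, 8):
    oldest, newest = supported_range(newest_year)
    print(f"year {newest_year}: ratio {newest / oldest:.0f}x, "
          f"absolute gap {newest - oldest:.0f} units")
```

Under these assumptions the ratio stays at 8x in both cases, while the absolute gap goes from 7 units to 14.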

If we say “okay forget it, only 3 years of supported hardware” that gets us out of jail for a little while, but eventually even the difference between the newest and slightly off-the-run hardware will be very large.

A gap in hardware capability is inevitable and it will only get worse!

Market Divergence

You may have noticed that the above paragraph makes a really gross assumption: that the lowest end hardware we support is the very best card on the market from a certain number of years ago. Of course this isn’t true at all. The lowest end hardware we support was probably pretty lame even when it was first made. The GeForce FX 5200 was never, even for a microsecond, a good graphics card. (It was, however, quite cheap even when first released.)

So the gap we really have is between the oldest low-end and newest high-end hardware, which is really quite wide. Consider that in May 2007 the GeForce 8800 Ultra was capable of 576 GFLOPs. Two months later (July 2007) the GeForce 8300 GS was released, packing a whopping 22 GFLOPs. In other words, in one video card generation the gap between the best and worst new card NVidia was putting out was 26x! (I realize GFLOPs isn’t a great metric for graphics card performance – really no one metric is adequate, but this example is to illustrate a point.)

Let’s go back in time a few years. In February 2002, NVidia released the GeForce 4 Ti (high-end) and MX (low-end). The slowest MX could fill 1000 MT/s, while the fastest Ti could fill 2400 MT/s. That’s a difference in fill rate of “only” 2.4x.
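The two spreads can be checked with a couple of lines of Python. The figures are the ones quoted above; note that the two generations are measured with different metrics (GFLOPs versus fill rate), so this is only an illustration of the widening gap, not a like-for-like benchmark.

```python
def spread(high_end, low_end):
    """How many times faster the high-end part is than the low-end part."""
    return high_end / low_end


gflops_2007 = spread(576, 22)      # GeForce 8800 Ultra vs. GeForce 8300 GS, GFLOPs
fill_2002 = spread(2400, 1000)     # fastest GeForce 4 Ti vs. slowest MX, MT/s

print(f"2007 spread: {gflops_2007:.1f}x")
print(f"2002 spread: {fill_2002:.1f}x")
```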

What’s going on here? Commodification! Simply put, graphics cards have reached the point where a lot of people just don’t care. Very few users need the full power of a GeForce 8800, so a lot of lower-end machines are sold with low-end technology – more than adequate for checking email and watching web videos. This creates a market for low-end parts and creates a wider “gap” for X-Plane. Dedicated returning X-Plane users might do the research and buy the fastest video card, but plenty of new users already have the computer, and it might have something unfortunate (like a Radeon X300 or Intel GMA950) already on the motherboard.

As X-Plane’s hardware needs diverge from the needs of mainstream computer users, we can expect some but not all of our users to have the latest and greatest. We can also expect plenty of new users to have underpowered machines.

Let me go out on a limb (I am not a technologist or even a hardware guy, so this opinion isn’t worth the bits it is printed on) and suggest this: we’re going to see a commodification fall-off in the number of cores everyone has too. Everyone is going to have two cores because it is cheap to put a second core on the main CPU if it lets you get rid of a whole array of special-purpose hardware. Give me multi-core and maybe I can get away with software-driven rendering (who needs hardware acceleration), software-driven sound (goodbye DSP chips), maybe I can even find cheaper ways to build my I/O. But 16 cores? The average user doesn’t need 16 cores to check email and run Windows 7.

So as transistors continue to shrink and it becomes possible to pack 8 or 16 cores on a die, I expect some people to have this and others not to. We’ll end up in the same situation as the graphics chips.

Summing It Up

To sum it up, sure there may be some drag on X-Plane in supporting a wider range of hardware. But it’s an inevitable requirement, because hardware shifts in capability even during a single version run, and as hardware becomes faster, the gap between high-end and cheap systems gets wider.

Over and over, whether it is a feature request list for X-Plane or another simulator, I see the same thing: “multi-core support” or “multi-threading” as a feature request.

Now before I continue, I must remind everyone: X-Plane is already multi-threaded and will take advantage of multi-core hardware. How much we use those cores depends on the type of scenery loaded.

The problem is that multi-threading (as a way to use multi-core hardware) is a solution technique, not a problem statement. What is threading going to be used for? If I simply program the other 7 cores of your computer to calculate PI to 223,924 digits have I met the feature request? This probably isn’t what anyone wants.

Implicit in the request for multi-core is (I speculate) a request for better frame-rate. (I did see one user who wanted multi-core to be used for a more accurate flight model. This strikes me as a poor trade-off for hardware based on my understanding of the flightmodel – we would use a lot of hardware for only a marginal accuracy improvement – but I commend the user for stating the problem and not just a possible solution.) But is multi-threading the best way to get framerate?

If I had two patches to X-Plane, one that doubled fps by using two cores and one that doubled fps by using more efficient code, which would be better? To me the obvious answer is: the code that is more efficient. It will run on any hardware (not just multi-core) and if you have multi-core hardware, we still have that second core free for some other functionality.

So to me the feature request should be something like: “higher framerate – and yes I have multi-core hardware”. Or perhaps “more visual detail at the same framerate – and yes I have multi-core hardware”.

All feature requests need to be in terms of problem statements, not possible solutions. This lets us find the set of problems that can be solved together in a coherent manner, and it lets us pick a solution that meets our engineering goals.

I’ve had a little bit of time to look at X-Plane 930 performance. The data isn’t 100% conclusive yet, but one performance issue sticks out like a sore thumb: per-pixel lighting hurts fps.

Now, part of this is that the per-pixel lighting shaders are not yet optimized (and perhaps are not terribly well written). I need to take some time to see if I can get some more performance out of them.

But…per-pixel lighting isn’t free – when per-pixel lighting is on, the video card is simply doing a lot more work than it used to. Consider: a typical X-Plane scene might have 250,000 vertices on screen at once. At a minimum, you have at least 750,000 pixels on screen*. Make your window bigger and that number goes up – fast! Turn on 16x FSAA and watch the pixel count get even larger. So the number of lighting calculations done by your graphics card is at least 3x higher with per-pixel lighting and potentially 50x higher. Even if your graphics card has a lot of power, that’s going to cost a bit.
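A back-of-the-envelope version of that comparison, as a Python sketch. The overdraw and FSAA multipliers are assumptions chosen for illustration; treating FSAA as a straight multiplier on shading work is a simplification, and real cards will differ.

```python
def lighting_work_ratio(width, height, vertices, overdraw=1.0, fsaa_factor=1.0):
    """Ratio of per-pixel to per-vertex lighting calculations for one frame.

    `overdraw` and `fsaa_factor` are rough multipliers on the pixel count,
    not measured values.
    """
    shaded_pixels = width * height * overdraw * fsaa_factor
    return shaded_pixels / vertices


VERTICES = 250_000  # the "typical scene" figure from the text

small = lighting_work_ratio(1024, 768, VERTICES)
big = lighting_work_ratio(1920, 1200, VERTICES, overdraw=1.5, fsaa_factor=4.0)

print(f"1024x768, no FSAA, no overdraw: {small:.1f}x")
print(f"1920x1200, 1.5x overdraw, 4x multiplier: {big:.1f}x")
```

With these made-up multipliers the small window lands at roughly 3x the per-vertex work and the large one goes past 50x.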

So one option I am considering is making per-pixel lighting a rendering option. This would allow users who want 922-level fps to simply turn it off. In my tests so far, turning off per-pixel lighting gets fps to within a few percent of 922.

(The only reason to have shaders on but per-pixel lighting off would be to have a cheap version of the reflective water. In the long term I want to limit the number of a la carte rendering settings, but for now it seems reasonable to support v9.00 base configurations through the entire version run.)

* In practice, not every pixel on screen requires full shading, e.g. the sky does not require complex shading. But some parts of the screen may be shaded multiple times. This is called “overdraw”. For example, with a runway we pay for our shaders twice – first with the ground underneath the runway, then with the runway itself.

I have received a number of emails bringing up crashes and performance problems in the X-Plane 930 betas – some of the writers are concerned that 930 might be a lame patch, going final with crashes and lousy performance.

To assuage this concern, let me make a few comments on where we are in the beta process, the likely future schedule, and the problems themselves.

The Schedule

X-Plane 930 has been an absurdly long beta. Going into the beta I had the mindset that we should take the beta slowly to have time to discover driver bugs on a wide variety of hardware – why rush and miss something?

I think we took this too far. To run a “slow” beta we have run other development simultaneously with the beta, but that in turn has stretched the beta to epic lengths.

We are starting to try to clamp down and close out the beta now, but it is going to get interrupted again. Austin and I will be traveling to attend the X-Plane conference in France, and from there we will spend two weeks working with Sergio in Italy. Given how rarely we go to Europe, we cannot pass up the opportunity to work with Sergio in person – we have a few problems in the sim where getting the three of us in one room is the best course of action.

Unfortunately our internet connectivity during the trip will be limited, and we can only bring some of our equipment, so closing out the beta while on the road is really not an option. Thus there will be yet another beta delay. Hopefully when we return, we can close the beta out for good.

Performance Problems

I have seen a number of emails regarding framerate with 930. A few notes on framerate and betas:

I try to save framerate for last in a beta. Most performance problems have one of two possible causes.

1. We communicate with the video card driver in a way that is fast on our systems but astoundingly slow on other systems. We discover this from slow performance in a particular piece of the code on other hardware.

2. The new beta does something new that is more expensive than what the old build did, and users have not figured out how to (or do not have a way to) turn this more expensive option off.

The solution to case 1 is to use another driver call; the solution to case 2 is to make sure the rendering options provide a way to turn the feature off. (We simply cannot guarantee that a new, nicer looking feature will run without a fps penalty – we can only give you a choice between better visuals and faster fps.)

Either way, framerate work tends to be the last thing on my beta list for this reason: other bug fixes may cause framerate problems, typically in category 1 – that is, a bug fix makes use of a new driver call that we find out has hurt performance. Thus I try to do all performance fixes at the end of beta when we won’t be adding new code.

This means that in practice, I have spent nearly zero time looking at performance. I am just starting that process this week, so it will be a little bit before I find problems.

Unfortunately, performance problems often manifest only on hardware I do not own – despite having a pile of computers in my office (a pile that seems to grow deeper and less manageable every year) there are just a ton of systems out there. So a lot of the performance bugs will get fixed by users trying experiments and reporting back to me – a slow process despite some of the really great efforts by our users.

Crashes

Crashes sometimes are manifestations of gross code defects, but often they fall into the category of driver problems too. I will be working to piece together the puzzle of strange behavior over the next few weeks; usually the solution is to not do some action that we thought was legal but fails in some hardware cases.

Don’t Panic

As always, my final message regarding the beta is: don’t panic. When it gets quiet over the next few weeks, it is because of travel, and even once Austin and I are back in the office, it will be slightly slow going to piece together problems on hardware other than our own.

Propsman caught something:

…is modifying the value of a batch of ATTR_light_level tris comparable [performance-wise] with toggling the state of a backlit generic instrument? Instinct tells me that you must have the latter more streamlined than the former, but maybe not?

He is right: in the current implementation, ATTR_light_level is probably a bit more expensive than using generic instruments. This may not be true in the future though.

- The generic instrument code is pretty tight.

- Right now ATTR_light_level sometimes has to adjust shaders, which can be expensive.

- In the future, ATTR_light_level has the potential to be very heavily optimized, while the generic instrument code will always be CPU based.

But to put it in perspective, all instrument drawing is slow compared to scenery drawing – in the scenery world we draw 50,000 triangles of identical OpenGL state in a row, and modern cards do that very, very well. In the panel, we have to put in a lot of CPU time to figure out how to draw each quad or tri-strip. Fortunately you probably don’t have 50,000 individually programmed flashing lights in your panel. Heck – there’s “only” 3608 datarefs published by the sim.

Perhaps other questions are important when picking ATTR_light_level vs. panel texture:

- Which is more useful: being able to have several variant images, including variant images that are not “lights” (this is only possible with generics), or being able to vary the light level gradually rather than just having on or off (this is only possible with ATTR_light_level)?

- Which is simpler to author given the rest of the panel?

In other words, it’s all pretty “slow”, but fortunately “slow” isn’t that slow. If your light has to blink, you may want to pick what looks best and is straightforward to author.

I receive a number of requests from authors for an attribute to tag an object as “needs maximum texture resolution” or “needs compression disabled” or “needs maximum anisotropic filtering”. The general idea is that the author wants to ensure a viewing environment that looks good.

For the most part, I am against these ideas – think of the two cases:

- If the attribute on the content is a request for a relative improvement in resolution (e.g. set my object to one texture res higher than the rest of the world) then what we’ll have is an arms race – every author will set their content with this flag, and the result will be that the entire sim tends to run at one res setting higher than expected. The result: users without enough VRAM will turn their res settings down another notch and all the content will look like it did before.

- If the attribute on the content is a request for an absolute setting (e.g. load this texture at the highest resolution possible) some content will simply not run on some computers that do run X-Plane.

My general point is this: users run X-Plane with texture resolution, anisotropic filtering, and compression set to lower settings for a reason – because their hardware isn’t very fast! Forcing the sim to ignore the settings and run at a higher res won’t make the user’s video card any better – it will just take the framerate vs. visual quality tradeoff out of the hands of the user.

That’s a simplification of the issue – in fact I am sympathetic to the notion of differential settings – that is, we need to use more texture resolution for art elements that are closer to the viewer. The sim already improves airplane resolution a bit and cockpit resolution a lot. We set anisotropic filtering a bit higher on runways because they are viewed from a shallow view angle pretty much all the time during normal flight.

At this point I am looking at some more specific overrides for cockpit objects. In particular, modern cockpits are built out of many attached objects, and not just the “cockpit object” itself – reducing the resolution of these objects can make cockpit labels illegible.

If we do get extensions to improve resolution I can only say this: use them very, very sparingly! Adding the extension doesn’t improve the user’s hardware. If the user had the ability to run your airplane at extreme res without compression and 16x anisotropic filtering, he’d already be doing that!

As of X-Plane 9, life was simple: ATTR_cockpit and ATTR_cockpit_region caused your triangles to be textured by the panel, and they could be clicked. ATTR_no_cockpit went back to regular texture and no clicking.

Well, it turns out that secretly ATTR_cockpit was two attributes jammed into one:

- Panel texture – that is, changing the texture from the object texture to the panel texture.

- Panel clickability – that is, mouse clicks are sent to the 2-d panel and act on those instruments.

With X-Plane 920 and the manipulator commands, this “clickability” aspect is revealed as a separate attribute, e.g. ATTR_manip_none sets no clickability, and ATTR_manip_command makes a command be run when the triangle is clicked. These attributes can be applied to any kind of texture – panel texture or object texture.

So how does ATTR_cockpit work in this context? Basically you can think of ATTR_cockpit as two “hidden” attributes:

ATTR_texture_panel

ATTR_manip_panel

and similarly, ATTR_no_cockpit is like

ATTR_texture_object

ATTR_manip_none

With this you can actually get any number of combinations of attributes, but the code is sometimes unexpected. In particular: if you want a manipulator other than the panel or none, you have to specify it again. Example:

# set command manip

ATTR_manip_command hand sim/operation/pause Pause

TRIS 0 3

ATTR_cockpit

# we now have to reset the cmd manipulator!

ATTR_manip_command hand sim/operation/pause Pause

TRIS 3 3

ATTR_no_cockpit

# we have to reset the cmd manipulator again!

ATTR_manip_command hand sim/operation/pause Pause

TRIS 6 3

Similarly, if you want the panel manipulator, you may have to reset the cockpit!

ATTR_cockpit

TRIS 0 3

# now make the mesh not clickable

ATTR_manip_none

TRIS 3 3

# Mesh clickable again

ATTR_cockpit

TRIS 6 3

The good news is: this isn’t nearly as wasteful as it seems. X-Plane’s object attribute optimizer is smart enough that it will remove the unnecessary attributes in both cases. In the first one, what you end up with is one manipulator change (to the command manipulator), and the panel texture change is done without changing manipulator state at all. In the second case, you end up with the manipulator change, but the panel texture is kept loaded the whole time.
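The bookkeeping described above can be modelled in a few lines of Python. This is only a toy model of the idea, not X-Plane's actual optimizer: it assumes an object starts with the object texture and no manipulator, treats ATTR_cockpit and ATTR_no_cockpit as the texture/manipulator pairs listed earlier, and counts a state change only when a TRIS command is about to draw with a state different from the last one drawn. The function name `count_state_changes` is made up for the sketch.

```python
def count_state_changes(lines):
    """Return (texture_changes, manip_changes) that are really needed at draw time."""
    # Pending state, as set by the attributes read so far.
    texture, manip = "object", "none"
    # State used by the last draw (assumed defaults before anything is drawn).
    drawn_texture, drawn_manip = "object", "none"
    texture_changes = manip_changes = 0

    for line in lines:
        words = line.split()
        if not words or words[0].startswith("#"):
            continue  # skip blank lines and comments
        command = words[0]
        if command == "ATTR_cockpit":
            texture, manip = "panel", "panel"
        elif command == "ATTR_no_cockpit":
            texture, manip = "object", "none"
        elif command == "ATTR_manip_none":
            manip = "none"
        elif command == "ATTR_manip_command":
            manip = " ".join(words[1:])  # cursor, command and tooltip identify it
        elif command == "TRIS":
            # Only here does a pending change cost anything.
            if texture != drawn_texture:
                texture_changes += 1
                drawn_texture = texture
            if manip != drawn_manip:
                manip_changes += 1
                drawn_manip = manip
    return texture_changes, manip_changes


EXAMPLE_1 = """
ATTR_manip_command hand sim/operation/pause Pause
TRIS 0 3
ATTR_cockpit
ATTR_manip_command hand sim/operation/pause Pause
TRIS 3 3
ATTR_no_cockpit
ATTR_manip_command hand sim/operation/pause Pause
TRIS 6 3
""".splitlines()

EXAMPLE_2 = """
ATTR_cockpit
TRIS 0 3
ATTR_manip_none
TRIS 3 3
ATTR_cockpit
TRIS 6 3
""".splitlines()

print(count_state_changes(EXAMPLE_1))
print(count_state_changes(EXAMPLE_2))
```

Run on the two examples above, the model reports a single manipulator change for the first and a single texture change for the second, in line with the description.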

In other words, even though the double attributes or duplicate attributes might seem to be inefficient, the optimizer will fix them for you.

One reason you might care: the cost of panel texture is one-time – that is, you pay for the size of the panel texture once per frame. But the cost of manipulatable triangles is per-triangle! So having more is bad. With ATTR_manip_none, you can use the panel texture but not have it be clickable, which can be a big performance win.

930 will handle manipulatable triangles a lot faster than 920 — but that’s still not a good reason to have all of your triangles be clickable!

This article is still unfinished, but I am trying to put together some info on how to detect performance problems like too many clickable triangles.

There are two ways to make 3-d instruments in your 3-d cockpit:

- Create 2-d instruments on a panel and use the “panel texture” (ATTR_cockpit or ATTR_cockpit_region in your OBJ) to show those 2-d instruments in the 3-d cockpit.

- Model the instruments in 3-d using animation.

So…which gives better framerate? Well, it turns out that they are actually almost the same…a few details:

- If your card can’t directly render-to-texture, there is an extra step for the panel texture. But that would be a weird case – all modern cards can render directly to textures unless you have hosed drivers.

- For very small amounts of geometry, there’s pretty much no difference between rotating a needle using the CPU and telling the GPU to do it by changing the coordinate system.

- The panel texture does put pressure on VRAM – if you’ve had to go to a 2048×2048 panel texture to have enough space, it’s going to hurt you.

Both approaches are actually quite inefficient – you get best vertex throughput on the card when you have at least 100 vertices per batch. But if a panel has 800 batches, you don’t necessarily want to do this – you’d pick up 80,000 vertices just trying to “utilize” the graphics card. That’s not a huge number, but it’s big enough to consider. Panels have enough moving parts that they’re going to push the CPU more than the GPU.
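The batch arithmetic is easy to play with in Python. The panel below is hypothetical (800 batches of one quad each); only the figures of 100 vertices per batch and 800 batches come from the text.

```python
def vertices_to_fill(batch_sizes, target=100):
    """Extra vertices needed to bring every batch up to `target` vertices."""
    return sum(max(0, target - size) for size in batch_sizes)


# Hypothetical panel: 800 batches, each a single quad of 4 vertices.
panel_batches = [4] * 800

actual = sum(panel_batches)
filler = vertices_to_fill(panel_batches)
print(f"actual vertices: {actual}")
print(f"filler needed to reach 100 per batch: {filler}")
print(f"total at 100 per batch: {actual + filler}")
```

For this made-up panel nearly all of the 80,000 vertices would be filler, which is the point: padding batches just to keep the card busy is not a good trade.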

A number of authors like the 3-d approach because they are more comfortable with 3-d tools, and because it can look sharper (since there is no intermediate limiting texture resolution).

There is only one case where I would advise against the 3-d approach: if it takes a huge number of animation commands to accomplish what can be done in one generic, use the panel texture; the generic instruments are all coded cleanly and none of them take that much CPU power. But some of them produce effects that would be relatively difficult to reproduce with animation.