It’s been a tough year for X-Plane authors. Key framing, manipulators, global lighting, baked lighting control, generic instruments, normal maps…version 9 has extended the possibilities for airplanes in a huge way. This is great for X-Plane users, and great for any author who wants to push the envelope, but there is a flip side: the time investment to make a “cutting edge” X-Plane aircraft has gone way, way up.

Here’s the problem: as hardware has been getting faster, the amount of data (in the form of detailed airplane models) needed to keep the hardware running at max has gone up. But the process of modeling an airplane hasn’t gotten any more efficient; all of that 3-d detail simply needs to be drawn, UV mapped and textured. Simply put, NVidia and ATI are making faster GPUs but not faster humans.

That’s why I was so excited about order independent transparency. This is a case where new graphics hardware and nicer looking hardware means less authoring work, not more. (The misery of trying to carefully manage ordered one sided geometry will simply be replaced by enabling the effect.)

My Daddy Can Beat Up Your Daddy

Cameron was on FSBreak last week discussing the new CRJ…the discussion touched on a question that gets kicked around the forums a lot these days: which allows authors to more realistically simulate a particular airplane…X-Plane 9 or FSX.

This debate is, to be blunt, completely moot. Both FS X and X-Plane contain powerful enough add-on systems that an author can do pretty much anything desired, including replacing the entire host simulation engine. At that point, the question is not “which can do more” because both can do more than any group of humans will ever produce. As Cameron observed, we’ve reached a point where the simulator doesn’t hold the author back, at least when it comes to systems modeling.

(It might be reasonable to ask: which simulator makes it easier to simulate a given aircraft, but given the tendency to replace the simulator’s built-in systems on both platforms, it appears the state of the art has gone significantly past built-in sim capabilities.)

Graphic Leverage

When it comes to systems modeling, the ability to put custom code into X-Plane or FS X allows authors to go significantly beyond the scope of the original sim. When it comes to graphics, however, authors on both platforms are constrained to what the sim’s native rendering engine can actually draw.

So if there’s a challenge to flight simulation next year, I think it is this: for next-generation flight simulators to act as amplifiers for the art content that humans build, rather than as engines that consume it as fuel. The simulator features that get our attention next year can’t just be the ability for an author to create something very nice (we’re already there), rather it needs to be the ability to make what authors make look even better.

(This doesn’t mean that I think that the platforms for building third party “stuff” are complete. Rather, I think it means that we have to carefully consider the amount of input labor it takes to get an output effect.)

I have two fundamental “rants” about flight simulator scenery, and the way people discuss it, market it, compare it, and evaluate it. The rants basically go like this:

- Mesh resolution (that is, the spacing between elevation points in a mesh) is a crude way to measure the quality of a mesh. It is horribly inefficient to use 5m triangles to cover a flat plateau just because you need them for some cliffs.

- At some point, the data in a very high res mesh becomes misleading. You have a 5m mesh. Great! Are you measuring a 5m change in elevation, or is that a parked car that has been included in the surface?

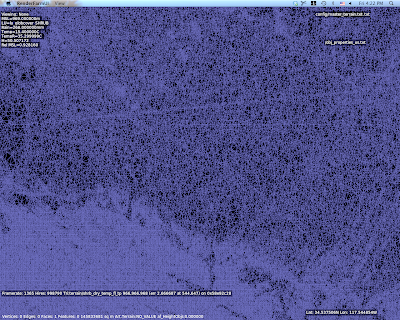

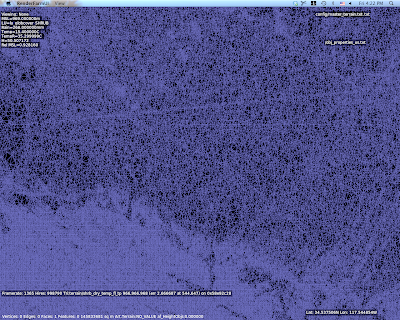

X-Plane uses an irregular mesh to efficiently use small triangles only where they are needed. I have some pictures on this here.

But it brings up the question: how good is a mesh? If you make a base mesh with MeshTool using a 10m input DEM (the largest DEM you can use right now), the smallest triangles might be 10m. But the quality of the mesh is really determined by the mesh’s “point budget” – that is, the number of points MeshTool was allowed to add to minimize error.

MeshTool beta 4 will finally provide authors with some tools to understand this: it will print out the “mesh statistics” – that is, a measure of the error between the original input DEM and the triangulation. Often the error* from using only 1/6th of the triangles from the original DEM might be as little as 1 meter.

I spent yesterday looking at the error metrics of the meshes MeshTool creates. I figured if I’m going to show everyone how much error their mesh has with a stats printout, I’d better make sure the stats aren’t terrible! After some debugging, I found a few things:

- Vector features induce a lot of “error” from a metrics standpoint. Basically, when you include vector features, you limit MeshTool’s ability to put vertices where they need to be to reduce meshing error. The mesh is still quite good even with vectors, but if you could see where the error is coming from, the vast majority would be at vector edges.

For example, in San Diego the vector water is sometimes not quite in the flat part of the DEM, and the result is an artificial flattening of a water triangle that overlaps a few posts of land. If that land is fairly steep (e.g. it gains 10+ meters of elevation right off the coast) we’ll pick up a case where our “worst” mesh error is 10+ meters. The standard deviation will be

- The whole question of how we measure error must be examined. My normal metric is “vertical” error – for a given point, how much does the elevation differ? But we can also look at “distance” error: for a given point on the ideal DEM, how far away is the nearest point on the mesh?

The “distance” metric gives us lower error statistics. The reason is that when we have a steep cliff, a very slight lateral offset of a triangle results in a huge vertical error, since moving 1m to the right might drop us 20 meters down. But…do we care about this error? If the effective result is the same cliff, offset laterally by 1m, it’s probably more reasonable to say we have “1m lateral error” than to say we have “20m vertical error”. In other words, small lateral errors become huge vertical errors around cliffs.

Absolute distance metrics take care of that by simply measuring the two cliff surfaces against each other at the actual orientation of the cliff. That is, cliff walls are measured laterally and the cliff floor is measured vertically. I think it’s a more reasonable way to measure error. One possible exception: for a landing area, we really want to know the vertical error, because we want the plane to touch pavement at just the right time. But since airplane landing areas tend to be flat, distance measurement becomes a vertical measurement anyway.
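To make the difference between the two metrics concrete, here is a minimal sketch using a 1-d terrain profile. The profiles, the 0.25m sampling step and all function names are invented for illustration; this is not MeshTool’s code, and MeshTool works on 2-d triangulations rather than polylines. The “mesh” is simply the ideal 20m cliff shifted 1m to the right.

```python
import math

# Hypothetical 1-d terrain profiles as (x, z) points, in meters.
# IDEAL stands in for the DEM; MESH is the same 20 m cliff shifted 1 m right.
IDEAL = [(0.0, 20.0), (50.0, 20.0), (51.0, 0.0), (100.0, 0.0)]
MESH = [(0.0, 20.0), (51.0, 20.0), (52.0, 0.0), (100.0, 0.0)]


def height_at(profile, x):
    """Linearly interpolate the profile's elevation at position x."""
    for (x0, z0), (x1, z1) in zip(profile, profile[1:]):
        if x0 <= x <= x1:
            t = (x - x0) / (x1 - x0)
            return z0 + t * (z1 - z0)
    raise ValueError("x is outside the profile")


def distance_to_segment(p, a, b):
    """Shortest distance from point p to the segment from a to b."""
    px, pz = p
    ax, az = a
    bx, bz = b
    dx, dz = bx - ax, bz - az
    t = ((px - ax) * dx + (pz - az) * dz) / (dx * dx + dz * dz)
    t = max(0.0, min(1.0, t))  # clamp so we stay on the segment
    return math.hypot(px - (ax + t * dx), pz - (az + t * dz))


def distance_to_profile(p, profile):
    """Shortest distance from point p to any segment of the profile."""
    return min(distance_to_segment(p, a, b)
               for a, b in zip(profile, profile[1:]))


xs = [i * 0.25 for i in range(401)]  # sample every 0.25 m from 0 to 100 m

# "Vertical" error: elevation difference at the same x.
max_vertical = max(abs(height_at(IDEAL, x) - height_at(MESH, x)) for x in xs)

# "Distance" error: how far each ideal point is from the mesh surface.
max_distance = max(distance_to_profile((x, height_at(IDEAL, x)), MESH)
                   for x in xs)

print(f"worst vertical error: {max_vertical:.2f} m")
print(f"worst distance error: {max_distance:.2f} m")
```

The same 1m lateral shift shows up as a worst-case vertical error of the full cliff height, but as a worst-case distance error of about 1m.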

Unnatural Terrain

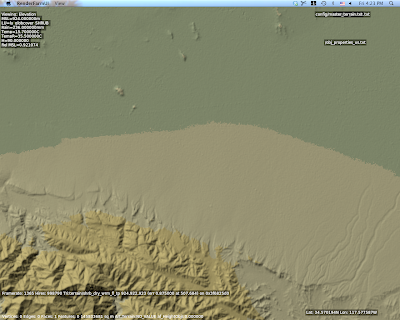

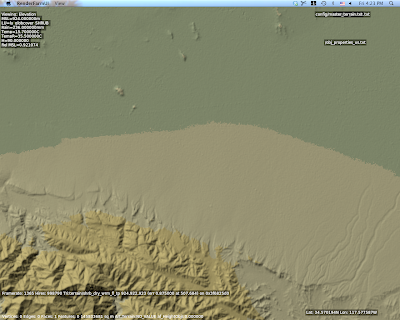

So there I am working with a void-filled SRTM DEM for KSBD. I have cranked the mesh to 500,000 points to measure the error (which is very low, btw…worst error 3m and standard deviation less than 15 cm).

But what are those horizontal lines of high density mesh?

I wasn’t sure what those were, but they looked way too flat and regular. So I looked at the original DEM and I saw this:

Ah – there are ridges in the actual DEM. Well that’s weird. What the heck could that be?

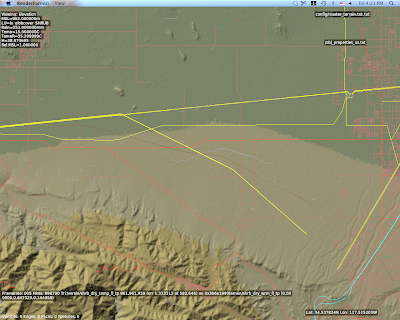

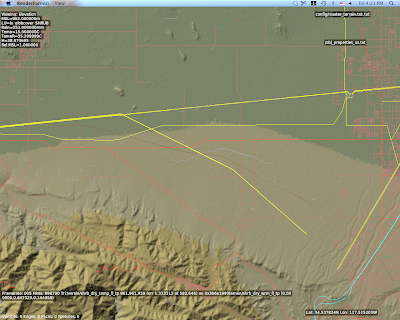

This is a view with vector data – and there you go. Those are power lines.

The problem in particular is that SRTM data is “first return” – that is, it is a measurement of the first thing the radar bounces off of from space. Thus SRTM includes trees, some large buildings, skyscrapers, and all sorts of other gunk we might not want. A mesh in a flight simulator usually represents “the ground”, but using first return data means that our ground is going to have a bump any time there is something fairly large on that ground. The higher the mesh res, and the lower the mesh error, the more of this real world 3-d coverage gets burned into the mesh.

So Do We Really Care About 5m DEMs?

The answer is actually yes, yes we do, but maybe not for the most obvious reasons.

The problem with raster DEMs (that is, elevation stored as a 2-d grid of heights) is that they don’t handle cliffs very well. A raster DEM cannot, by its very format, handle a truly vertical cliff. In fact, the maximum slope it can create is based on

arctan(cliff height / DEM spacing)

Which is math to say: the tighter your DEM spacing, the higher the maximum slope we can represent for a given cliff at a certain height. Note that the total cliff height matters too, so even a crude 90m DEM like SRTM can represent a canyon if it’s really huge**, but we need a very high res DEM to get shorter vertical surfaces.
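As a quick worked example of that formula, the sketch below plugs in a few cliff heights and post spacings. The function name is mine; the 247 m / 90m pair comes from the SRTM footnote, and the 20m cliff is just an illustrative value.

```python
import math


def max_slope_degrees(cliff_height_m, dem_spacing_m):
    """Steepest slope a raster DEM can show for a cliff of this height,
    assuming the whole drop happens between two adjacent posts."""
    return math.degrees(math.atan(cliff_height_m / dem_spacing_m))


# A huge cliff on a crude 90 m DEM: about 70 degrees.
print(round(max_slope_degrees(247, 90), 1))

# A modest 20 m cliff needs much tighter spacing to look steep.
for spacing in (90, 10, 5):
    print(spacing, round(max_slope_degrees(20, spacing), 1))
```

For the 20m cliff, going from 90m posts to 5m posts takes the steepest representable slope from roughly 12.5 degrees to roughly 76 degrees.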

So the moderated version of my rant goes something like this:

- High res input DEMs are necessary to represent small terrain features that are steep if we are using raster DEMs.

- High res meshes are not necessary – we only need res for part of the mesh where it counts.

- Let’s not use mesh res to represent 3-d on the ground, only the ground itself.

There is another way to deal with elevation besides DEMs, and in fact it is used for LIDAR data (where the resolution is so high that a raster DEM would be unusable): you can represent elevation as a series of vector contour lines in 3-d. The beauty of contour lines is that they represent cliffs no matter how steep (up to vertical), and you don’t need a lot of storage if the ground is not very intricate.

The meshing data format inside MeshTool could probably be made to work with contours, but I haven’t seen anyone with high quality contour data yet. We’ll probably support such a feature some day.

* Really this should be “the additional error”, because when you get a DEM, it already has error – that is, the technique for creating the DEM will have some error vs. the real world. For example, if I remember right (and I probably do not) 90% of SRTM data points fall within 8m vertically of the real world values. So add MeshTool and you might be increasing the error from 8m to 9-10m, that is, a 12-25% increase in error.

** For the SRTM this might be a moot point – the SRTM has a maximum cliff slope in certain directions defined by the relationship between the shuttle’s orbit and the latitude of the area being scanned. The maximum cliff at any point in the SRTM is 70 degrees, which can be represented by a 247 m cliff using a pair of 90m posts.

This is getting embarrassing – I’m at risk of getting shut out. I was able to fix the “Null texture, how” error users were seeing on NVidia hardware.

It turns out it was an uninitialized variable in code that was never used until NV changed their drivers. As far as I can tell, NV dropped support for FSAA in 16-bit mode a few months ago, at least on some of their newer GPUs. (It is also possible that the incantation necessary to get FSAA has changed a lot and I simply don’t know what it is.)

So the dialog between X-Plane and the video card ran something like the Monty Python cheese shop sketch:

X-Plane: So … can you do full screen anti-aliasing?

GeForce 8: Oh yes, of course! (Please, I’m a GeForce 8 card.)

X-Plane: Splendid! So…how about 16x FSAA?

GeForce 8: Sorry, I can’t do that.

X-Plane: Ah. How about 8x FSAA?

GeForce 8: Sorry, can’t do that either.

X-Plane: I see. Well then, how about 4x FSAA?

GeForce 8: Nope.

X-Plane: 2x FSAA?

GeForce 8: No way.

X-Plane: Ah. I see.

At this point in the dialog X-Plane would promptly lose track of what it had been doing in the setup process, throw out its notes on the GPU setup, and then freak out a bit later when it realized its note taking left something to be desired.

This is the first case I’ve hit where a video card advertises FSAA and can’t actually do it.
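The shape of that negotiation, and of the fix, can be sketched roughly as follows. This is not X-Plane’s setup code; the function name, the arguments and the idea of a “supported levels” set are all hypothetical. The point is only that the result is initialized before the loop, so the “every level refused” path still returns something sane.

```python
def choose_fsaa(advertises_fsaa, supported_levels, requested=16):
    """Pick the highest FSAA level the card accepts; 0 means no FSAA.

    supported_levels is a hypothetical set of levels the driver will
    actually grant, which may be empty even if FSAA is advertised.
    """
    chosen = 0  # set up front, so "nothing worked" is a valid answer
    if not advertises_fsaa:
        return chosen

    level = requested
    while level >= 2:  # try 16x, then 8x, 4x, 2x
        if level in supported_levels:
            chosen = level
            break
        level //= 2
    return chosen


# The cheese shop case: FSAA is advertised, but every level is refused.
print(choose_fsaa(True, set()))
# A card that tops out at 4x.
print(choose_fsaa(True, {2, 4}))
```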

Anyway, if you have hit this bug:

- Update to 941 final – it should fix it.

- Stop trying to run with FSAA and 16-bit color. This is a somewhat crazy combination. FSAA attempts to clean up rendering artifacts at the cost of fill rate. 16-bit color creates artifacts to save fill rate. If your GPU needs 16-bit color to run at high framerate, it’s time to turn FSAA off.

(I realize that 16-bit color and aliasing are different kinds of artifacts, and some users might prefer harsh color transitions to harsh polygon transitions. But I still say, go for 32-bit color, no FSAA. When the sim is running in 16-bit mode, a good chunk of the sim still runs in 32-bit mode because 16-bit RGB surfaces only have 1 bit of alpha.* So you’re not quite getting universal savings but you get 16-bit output colors, so the results look universally bad.)

*This assumes 5551, or 565 pixels. There is a 4-bit alpha 16-bit color format, cleverly called 4444, but if you thought 16-bit looks bad…
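To see how little room these formats leave, here is a small sketch that packs 8-bit channels into each 16-bit layout. The helper names are mine, and the bit order (red in the high bits, alpha in the low bits) is an assumption; real hardware and APIs offer several orderings.

```python
def quantize(value, bits):
    """Drop the low bits of an 8-bit channel (0-255) to fit `bits` bits."""
    return value >> (8 - bits)


def pack_565(r, g, b):
    """16-bit color with no alpha at all."""
    return (quantize(r, 5) << 11) | (quantize(g, 6) << 5) | quantize(b, 5)


def pack_5551(r, g, b, a):
    """16-bit color with a single on/off alpha bit."""
    return ((quantize(r, 5) << 11) | (quantize(g, 5) << 6)
            | (quantize(b, 5) << 1) | quantize(a, 1))


def pack_4444(r, g, b, a):
    """16 alpha levels, but only 16 levels per color channel too."""
    return ((quantize(r, 4) << 12) | (quantize(g, 4) << 8)
            | (quantize(b, 4) << 4) | quantize(a, 4))


# Half-transparent white: 5551 can only say "opaque" or "invisible".
print(hex(pack_5551(255, 255, 255, 127)))  # alpha bit ends up 0
print(hex(pack_4444(255, 255, 255, 127)))  # alpha keeps 4 bits
```

With one alpha bit there is no way to store partial transparency, which is why surfaces that need real alpha stay in 32-bit.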

Identifying an underpowered video card is difficult. Video cards have simple, non-confusing names like the CryoTek GeForce FX 9999 XYZ. What the heck does all that stuff mean?

(The lists below will contain a number of “specs”. Do not panic! At the end I will show you where to look this stuff up on Wikipedia.)

A modern graphics card is basically a computer on a board, and as such, it has the following components that you might care about for performance:

- VRAM. This is one of the simplest ones to understand. VRAM is the RAM on the graphics card itself. VRAM affects performance in a fairly binary way – either you have enough or your framerate goes way down. You can always get by with less by turning texture resolution down, but of course then X-Plane looks a lot worse.

How much VRAM do you need? It depends on how many add-ons you use. I’d get at least 256 MB – VRAM has become fairly cheap. You might want a lot more if you use a lot of add-ons with detailed textures. But don’t expect adding more VRAM to improve framerate – you’re just avoiding a shortage-induced fog-fest here.

- Graphics Bus. The GPU is connected to your computer by the graphics bus, and if that connection isn’t fast enough, it slows everything down. But this isn’t really a huge factor in picking a GPU, because your graphics bus is part of your motherboard. You need to buy a GPU that matches your motherboard, and the GPU will slow down if it has to.

- Memory Bus. This is one that gets overlooked – a GPU is connected to its own internal memory (VRAM) by a memory bus, and that memory bus controls how fast the GPU can really go. If the GPU can’t suck data from VRAM fast enough, you’ll have a slow-down.

Evaluating the quality of the internal memory bus of a graphics card is beyond what I can provide as “buying advice”. Fortunately, the speed of the bus is usually paired with the speed of the GPU itself. That is, you don’t usually need to worry that the GPU was really fast but its bus was too slow. So what we need to do is pick a GPU, and the bus that comes with it should be decent.

- Of course the GPU sits on the graphics card. The GPU is the “CPU” of the graphics card, and is a complex enough subject to start a new bullet list. (As if I wouldn’t start a new bullet list just because I can.)

So to summarize, you want to look at how much VRAM your card has and make sure the bus interface matches your motherboard. What about the GPU? There are three things to pay attention to on a GPU:

- Generation. Each generation of GPUs is superior to the previous generation. Usually the GPUs can create new effects, and often they can create old effects more cheaply.

The generation is usually specified in the leading number, e.g. a GeForce 7xxx is from the GeForce 7 series, and the GeForce 8xxx is from the GeForce 8 series. You almost never want to buy a last-generation GPU if you can get a current generation GPU for a similar price.

- Clock Speed. A GPU has an internal clock, and faster is better. The benefit of clock speed is linear – that is, if you have the same GPU at 450 MHz and 600 MHz, the 600 MHz one will usually provide about 33% more throughput.

Most of the time, the clock speed differences are represented by that ridiculous alphabet soup of letters at the end of the card name. So for example, the difference between a GeForce 7900 GT and a GeForce 7900 GTO is clock speed – the GT runs at 450 MHz and the GTO at 650 MHz.*

- Core Configuration. This is where things get tricky. For any given generation, the different card models will have some of their pixel shaders removed. This is called “core configuration”. Basically GPUs are fast because they have more than one of all of the parts they need to draw (pixel shaders, etc.) and in computer graphics, many hands make light work. The core configuration is a measure of just how many hands your graphics card has.

Core configuration usually varies with the model number, e.g. an 8800 has 96-128 shaders, whereas an 8600 has 32 shaders, and an 8500 has 16 shaders. In some cases the suffix matters too.

How would you ever know your core configuration, clock speed, etc.? Fortunately Wikipedia is the source of all knowledge. Here are the tables for NVidia and ATI.

Important: You cannot compare clock speed or core configuration between different generations of GPU or different vendors! A 16-shader 400 MHz GeForce 6 series card is not the same as a 16-shader 400 MHz GeForce 7 series card. The GPU designers make serious changes to the card capabilities between generations, so the stats don’t apply.

You can see this in the core configuration column – the number of different parts they measure changes! For example, starting with the GeForce 8, NVidia gave up on vertex shaders entirely and started building “unified shaders”. Apples to oranges…

Don’t Be Two Steps Down

This is my rule of thumb for buying a graphics card: don’t be two steps down. Here’s what I mean:

The most expensive, fanciest cards for a given generation will have the most shaders in their core config, and represent the fastest that generation of GPU will ever be. The lower models then have significantly fewer shaders.

Behind the scenes, what happens (more or less) is: NVidia and ATI test all of their chips. If all 128 shaders on a GeForce 8 GPU work, the GPU is labeled “GeForce 8800” and you pay top dollar. But what if there are defects and only some of the shaders work? No problem. NV disables the broken shaders – in fact, they disable so many shaders that you only have 32 and a “GeForce 8600” is born.

Believe me: this is a good thing. This is a huge improvement over the old days when low-end GPUs were totally separate designs and couldn’t even run the same effects. (Anyone remember the GeForce 4 Ti and Mx?) Having “partial yield” on a chip set is a normal part of microchip design; being able to recycle partially effective chips means NV and ATI can sell more of the chips they create, and thus it brings the cost of goods down. We wouldn’t be able to get a low end card so cheaply if they couldn’t reuse the high-end parts.

But here’s the rub: some of these low end cards are not meant for X-Plane users, and if you end up with one, your framerate will suffer. Many hands make light work when rendering a frame. If you have too few shaders, there aren’t enough hands, drawing takes forever, and your framerate suffers.

For a long time the X-Plane community was insulated from this, because X-Plane didn’t push a lot of work to the GPU. But this has changed over the version 9 run – some of those options, like reflective water, per-pixel lighting, etc. start to finally put some work on the GPU, hitting framerate. If you have a GeForce 8300 GS, you do not have a good graphics card. But you might not have realized it until you had the rendering options to really test it out.

So, “two steps down”. My buying advice is: do not buy a card where the core configuration has been cut down more than once. In the GeForce 8 series, you’ll see the 8800 with 96-128 shaders, then the first “cut” is the 8600 with 32 shaders, and then the 8500 brings us down to 16.

A GeForce 8800 was a good card. The 8600 was decent for the money. But the 8500 is simply underpowered.
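The rule of thumb is simple enough to write down as a few lines of code. This is only a sketch: the function names are mine, and the table uses the shader counts quoted above, with the 8800 entered at its top figure of 128.

```python
# Shader counts for the GeForce 8 models discussed above.
# The 8800 ships with 96-128 shaders; 128 is used here for simplicity.
GEFORCE_8 = {"8800": 128, "8600": 32, "8500": 16}


def steps_down(lineup, model):
    """How many times the core configuration was cut relative to the
    top card of the same generation (0 means the full configuration)."""
    tiers = sorted(set(lineup.values()), reverse=True)
    return tiers.index(lineup[model])


def is_two_steps_down(lineup, model):
    """True if the card has been cut down more than once."""
    return steps_down(lineup, model) >= 2


for model in GEFORCE_8:
    print(model, steps_down(GEFORCE_8, model),
          is_two_steps_down(GEFORCE_8, model))
```

Remember that the comparison only makes sense within one generation and one vendor, so each lineup needs its own table.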

When you look at prices, I think you’ll find the cost savings from going “two steps down” are not a lot of money. But the performance hit can be quite significant. Remember, the lowest end cards are being targeted at users who will check their email, watch some web videos, and that’s about it. The cards are powerful enough to run the operating system’s window manager effects, but they’re not meant to run a flight simulator with all of the options turned on.

If you do have a “two step” card, the following things can help reduce GPU load:

- Turn down or off full screen anti-aliasing.

- Turn off per pixel lighting, or even turn off shaders entirely.

- Reduce screen size.

* GT = Good Times, GTO = Good Times Overclocked? Only NVidia knows for sure…

Beta users, see the bottom of this post for how scalability turns into possible bugs.

In computer science, a program or architecture is scalable if it doesn’t totally vomit up a lung as its constituent parts become bigger. For a cleaner definition, see Wikipedia, source of all internet knowledge.

An ant is not scalable – if you made an ant 100 times larger in every dimension, its tiny legs would break under its new weight. (An ant is not scalable because its weight grows faster than its structural strength. Thus elephants are not built like ants.) Geeks: scalability is to computer science as marginal cost is to economics.

Before X-Plane 940, the apt.dat file was distinctly not scalable. The entire file was loaded into memory; as users created more and more taxiway lines and signs and details, we simply used more and more memory. This approach isn’t very scalable because authors have the potential to grow the apt.dat file faster than our system requirements can increase.

X-Plane 940 fixes this by not loading the entire apt.dat file into memory. Instead, only essential airport information is loaded into memory, along with a note as to where in the file the airport lives. Whenever an airport actually has to be built into a 3-d mesh while you fly, we go back to the apt.dat file and load the rest of the data for the one airport we are building, use it, and throw it out. Since 3-d airport meshes are built on a second core, the cost of loading one airport off disk is pretty harmless.

The problem with this fix is that it introduced a new scalability problem. Consider:

- Meshes in 940 are built on as many CPU cores as you have – some users have 8!

- Each CPU core could be working on a different airport, depending on how many are nearby.

- Each airport has to load up the apt.dat file to get the extra airport data.

This means that at times on an 8 core machine we could easily have allocated 50 * 8 = 400 MB of memory just to temporarily hold 8 copies of the apt.dat file.*

This is of course completely silly – there’s no reason to load the whole apt.dat file to get one airport, and the fix that is going into beta 8 lets the airport loader surgically grab just one airport. Thus we will be scalable again, because adding more cores won’t cause memory usage to go up.
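The “note where the airport lives, then grab just that piece” idea can be sketched like this. To be clear about the assumptions: the record layout below is made up for the example and is not the real apt.dat syntax, the function names are mine, and the sim itself uses memory mapped I/O rather than plain seek and read.

```python
import io

# A made-up stand-in for apt.dat: each record starts with an AIRPORT line.
SAMPLE = (
    b"AIRPORT KSBD\n"
    b"taxiway line A\n"
    b"sign 12\n"
    b"AIRPORT KSAN\n"
    b"taxiway line B\n"
)


def build_index(f):
    """One pass over the file: remember (offset, length) per airport."""
    index = {}
    current = None
    start = 0
    offset = 0
    for line in f:
        if line.startswith(b"AIRPORT "):
            if current is not None:
                index[current] = (start, offset - start)
            current = line.split()[1].decode("ascii")
            start = offset
        offset += len(line)
    if current is not None:  # close out the last record
        index[current] = (start, offset - start)
    return index


def load_airport(f, index, airport_id):
    """Read only the bytes that belong to one airport."""
    start, length = index[airport_id]
    f.seek(start)
    return f.read(length)


apt_file = io.BytesIO(SAMPLE)  # stands in for the file on disk
airport_index = build_index(apt_file)
print(load_airport(apt_file, airport_index, "KSAN").decode("ascii"))
```

Only the small index stays resident; each worker that needs an airport pulls in that airport’s bytes and nothing else, so memory use no longer grows with the number of cores.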

Beta Users: Please keep an eye out for X-Plane running out of memory – if it starts to do so in the next beta it means that some part of this code change munged memory management. We’re running stress tests on the sim now, but touching the low level memory and file handling code late in beta isn’t something I like to do.

* While loading 8 copies of the apt.dat file is wasteful of memory, it is not slow; X-Plane uses memory mapped file I/O, so reading a small part of a large file is very fast – just not very virtual-memory efficient.

I’m driving at 55 mph on the highway. I drive over a nail, lose a tire, skid off the road, crash through the guard rail, plunge off a cliff, and die. That’s not much fun. But when you get to the accident site, you’ll probably be able to piece together what happened. The skid marks, the nail, the hole in the guard rail, the car wreckage below – you can connect the dots.

Now…let’s say I’m driving 500 mph. Same nail, same out of control crash. But this time it’s going to be a lot harder to tell what happened. Lord knows where the nail ends up, the distance from the nail strike to exiting the highway is going to be a lot bigger, and the car is going to be in smaller pieces scattered over a wider distance. It’s going to take a lot longer to piece together what went wrong.

That, in a nutshell, describes the motivation for an X-Plane beta with all of the debug and safety checks on. X-Plane’s normal operation is like the car doing 500 mph – when it crashes and dies, there’s very little left that can be used to figure out what went wrong. When there is a bug in the code that destabilizes the sim, finding it via crashes in release builds takes a lot of developer time and slows the whole beta down.

With the safety checks on, X-Plane still crashes when something goes wrong – but the bodies and wreckage are all a lot closer to the scene of the crime, and the evidence left around is in much better shape.

One of the side effects of the safety builds is that they catch “harmless” coding mistakes – (harmless in quotes – the bug might seem harmless but who knows if that will always be true). XSquawkBox now quits the sim with an ugly alert box because it reads off the end of a piece of the airplane data structure via the plugin system. This hasn’t hurt things in the past, but it’s not really correct. Beta 5 should fix the underlying problem, letting you run XSquawkBox again. (The fix will be in X-Plane, not XSquawkBox.)

I’m seeing a number of bug reports where weird artifacts are showing up in 940…missing pieces of runway, flickering triangles…all sorts of good stuff!

I believe that this is due to some kind of bug relating to threading, X-Plane and the video drivers. I won’t say whose fault it is because I really don’t know. I do know that the bug appears to not happen on OS X. (But this could simply be because the threads time out differently on OS X.)

The changes to the rendering engine for 940 from 930 are substantial and aggressive – it’ll take us a little bit to fix these things.

When you wonder how come programs don’t use all 8 of your cores yet, well…this is why…multi-core programming is complex, tricky, tedious to debug, and often involves substantial changes from the original code.

X-Plane 940 beta 1 is out…it will take a little bit to get the release notes and docs on the website completely up-to-date. We’re still dealing with loose ends from our migration to the new web site, and most of my office is packed up for a move to the Boston area. I’ll try to get docs up as fast as I can given the chaos flying about.

Given the interest multi-core stirred up in previous notes, I will mention one change to 940: with this build we’ve added yet more multi-core to X-Plane.

In 931, X-Plane will use as many cores as you have to load textures, but only one to build “3-d scenery” (a loose category for the work we do when we make airport taxiways and lines, build forests, and extrude roads).

In 940, this “3-d scenery” is also done on as many cores as you have. This should speed up load times a bit, particularly under very heavy tree settings, and hopefully keep the forest engine running faster for users with more cores.
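The general pattern, handing independent build jobs to a pool sized to the machine, looks roughly like the sketch below. None of this is X-Plane code: the job tuples and `build_mesh` are placeholders. One caveat specific to this illustration: in CPython, threads show the structure but will not speed up CPU-heavy work, so a process pool would be needed to actually occupy several cores.

```python
import os
from concurrent.futures import ThreadPoolExecutor


def build_mesh(job):
    """Placeholder for an expensive, independent scenery build step."""
    kind, input_size = job
    return f"{kind}: {input_size * 2} triangles"


def build_all(jobs, workers=None):
    """Run every job on a pool with one worker per core by default."""
    workers = workers or os.cpu_count() or 1
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() hands jobs to idle workers and returns results in order.
        return list(pool.map(build_mesh, jobs))


jobs = [("airport", 10), ("forest", 5), ("roads", 8)]
for result in build_all(jobs):
    print(result)
```

Because each job is independent, adding cores needs no change to the jobs themselves, only more workers in the pool.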

It also sets us up for long-term growth; X-Plane’s visual quality is sometimes limited by the time to build 3-d meshes…being able to do this work on many cores means we can use higher quality algorithms.

Consider for example the roads: my original “road extruder” (the code that converts a vector road into a 3-d model, called an extruder because it builds a 3-d road from a cross section like one of those play-dough toys) made beautiful intersections with stop-lines and cross walks and lots of other great stuff.

It was also really slow. And at the time the sim wouldn’t fly at all while roads were extruded, so speed was of primary concern. So I replaced it with the much dumber extruder you see today, where intersections are basically ignored.

Now that we have 3-d scenery built on multiple cores, we can begin to provide rendering options that take more CPU time but produce higher quality results. The trees and airport layouts already do this (in that they take more time and produce slower, more detailed, higher triangle count scenery at higher rendering settings for the same input DSF and apt.dat file). With more cores, we can continue this strategy with roads and other parts of the sim without worrying about overloading the one core that was doing this work.

Of course just because we can use 8 cores doesn’t mean we do…you won’t see 8 cores maxed out very often, particularly if you have simple scenery and a very fast machine.

To answer the most basic questions:

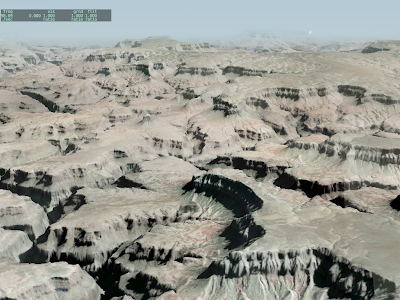

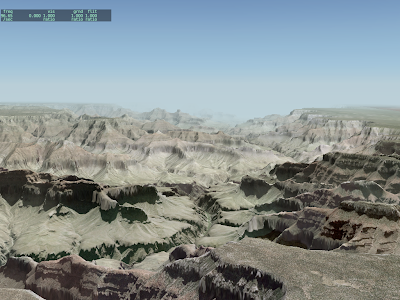

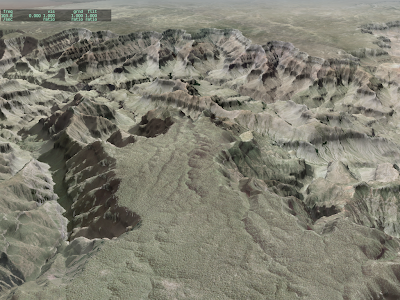

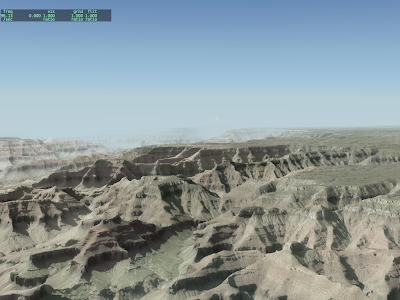

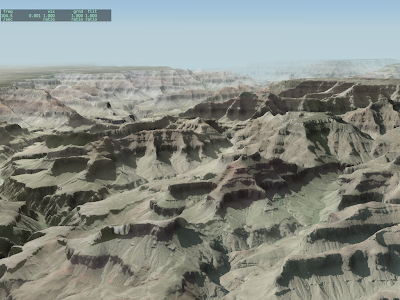

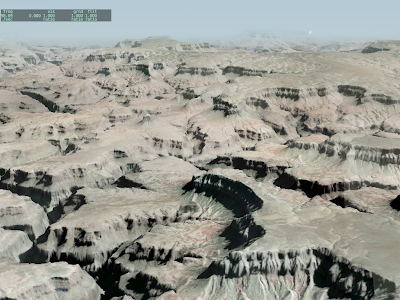

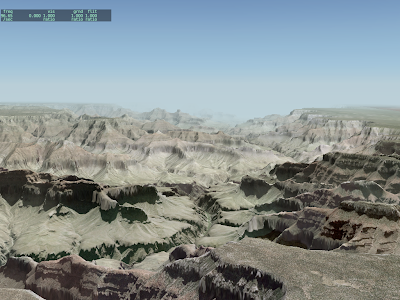

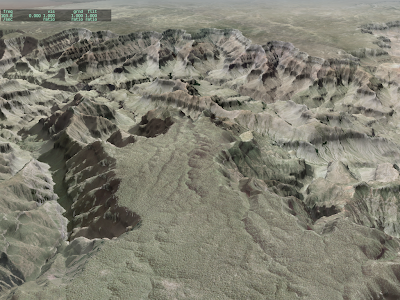

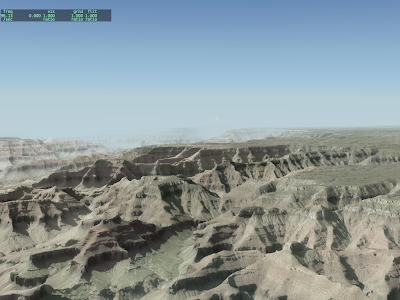

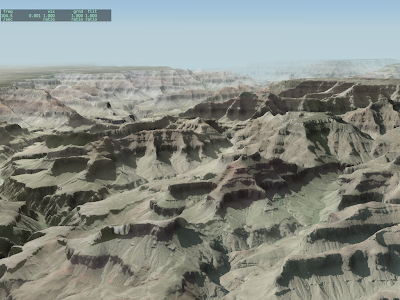

- This is a base mesh orthophoto scenery I made with MeshTool, as a test.

- The source DEM is 10m NED, the source imagery is 1mpp DOQQ, down-scaled to 3mpp. I gave MeshTool a point budget of 500,000 mesh points per DSF tile, and it used them all.

- This version of MeshTool (2.0 beta 2) should be out in the next 3 days.

- That’s X-Plane 930RC4. The framerate really is around 100 fps.

- There are no dynamic real-time shadows. Rather, the orthophotos have the shadows “baked” in because they’re photos.

- There are artifacts at the joins of the orthophotos because I spent no time fixing projection errors.

Clearly we need more than 25 nm visibility in some cases!

In the next X-Plane 930 beta (it should post today I think) the rendering settings have two new check-boxes: one to enable the “dynamic” airplane shadow and one to enable per-pixel lighting.

In the last week a number of users emailed me performance numbers via the fps test, and from what I can tell, 99% of performance problems can be attributed to these two new features chewing up resources in a way that 922 did not. When the features are both disabled, from what I can tell, the sim should be as fast or faster than 922.

The new beta will also limit dynamic shadows to your aircraft – beta 13 calculates a dynamic shadow for every aircraft, which is unacceptably slow when you have a lot of AI planes enabled.

I may still be able to improve the performance of the per-pixel-lighting shader, but fundamentally per-pixel lighting is going to be more expensive than per vertex. The average X-Plane scene might have 200,000 to 500,000 vertices. At absolute minimum resolution, no FSAA, you’re going to have over 700,000 pixels even if there is no “over-draw” – you could easily have 10x that fill rate with only a modest increase in overdraw, full-screen anti-aliasing and window size. Simply put, per-pixel lighting is more work.
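Here is the back-of-the-envelope arithmetic behind that comparison. The function name is mine, and the specific window sizes, FSAA level and overdraw factor are assumptions chosen for illustration (a 1024 x 768 window is consistent with the “over 700,000” figure).

```python
def filled_samples(width, height, fsaa_samples=1, overdraw=1.0):
    """Rough count of samples the GPU has to fill for one frame."""
    return int(width * height * fsaa_samples * overdraw)


vertices_low, vertices_high = 200_000, 500_000  # typical scene, per above

minimum = filled_samples(1024, 768)  # small window, no FSAA, no overdraw
heavier = filled_samples(1600, 1200, fsaa_samples=4, overdraw=1.5)

print(minimum)                       # already above the vertex counts
print(heavier)
print(round(heavier / minimum, 1))   # how much more fill work than minimum
```

Even the minimum case fills more pixels than a typical scene has vertices, and a bigger window with 4x FSAA and modest overdraw lands well past ten times that.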

Please bear in mind: without per-pixel lighting X-Plane’s pixel shader is extremely simple. If you have a “low-end” card this could give you the illusion of GPU power when there is really not much under the hood.

Examples of low-end cards: GeForce 7300, GeForce 8400, GeForce 9400, Radeon X300, Radeon X1300, Radeon HD2400. All of these cards are the younger brother of a fairly capable card, but with fewer pixel shader units/cores. If each unit is doing very little work, you don’t need that much pixel-filling power…but when we go to a “real” shader, the difference between a GeForce 8400 and 8800 becomes very, very apparent. Simply put, even with optimization the GeForce 7300 (for example) will never run a huge monitor with per pixel lighting and high FSAA.