What Is a Photometric Renderer?

Simply put, a photometric renderer is one that tries to create realism by using actual real world light levels (specified in real physical units) in its internal calculations. In other words, we render the world as it is.

A decade ago, the image of the world you saw through your simulator was essentially built out of pre-made images drawn in Photoshop by artists. These images were drawn as realistically as possible, but they were low dynamic range (LDR) because that’s all the monitor could handle. The sky was as blue as the art director decided, and then created with Photoshop. This worked great in its time, but with today’s modern graphics cards we can do much better.

First We Have to Go Higher

A traditional low dynamic range (LDR) renderer has colors in a range from 0 to 255, but if we want to model the real world, we’re going to need some much bigger numbers. We measure luminance in candela per meter squared (cd/m^2) or “nits” (nt). Here’s a Wikipedia chart listing the luminance of a wide variety of stuff. A few examples:

- Flood lights on buildings at night – 2 nts.

- An old crappy LDR monitor – 80 nts.

- A nice newer LCD monitor – 500 nts.

- The clear sky – 7000 nts.

- Clouds – 10,000 nts.

- The sun at sunset – 600,000 nts.

- The sun at noon – 1,600,000,000 nts.

(That last one is why your mother told you not to stare at the sun.)

Note how wide the range of numbers is: daytime images are made up of things in the “thousands” of nts, but with a wide range of variation, while night ones might be single digits.
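To get a feel for that range, here is a small sketch that expresses the values from the list above as photographic stops (doublings of luminance) relative to the night flood lighting. The dictionary and the helper name `stops_between` are made up for this illustration; only the luminance figures come from the list.

```python
import math

# Luminance values in nits (cd/m^2), copied from the list above.
LUMINANCE_NITS = {
    "flood-lit building at night": 2,
    "old LDR monitor": 80,
    "newer LCD monitor": 500,
    "clear sky": 7_000,
    "clouds": 10_000,
    "sun at sunset": 600_000,
    "sun at noon": 1_600_000_000,
}


def stops_between(dim_nits: float, bright_nits: float) -> float:
    """Number of doublings (photographic stops) from dim_nits to bright_nits."""
    return math.log2(bright_nits / dim_nits)


for name, nits in LUMINANCE_NITS.items():
    stops = stops_between(2, nits)
    print(f"{name:30s} {stops:5.1f} stops above night flood lighting")
```

For comparison, the 255 non-zero levels of an 8-bit channel span just under 8 doublings if read as linear values, while the list above spans almost 30.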

So to be more realistic, the sim needed to render using bigger numbers. X-Plane 12’s rendering pipeline is entirely HDR, from start to finish, using 16-bit floating point encoding to hold a much wider dynamic range of luminance.
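As a rough illustration of what 16-bit floating point buys over 8-bit integers, the sketch below round-trips a few of the luminance values through IEEE 754 half precision using Python’s standard `struct` module. It is not X-Plane code, and the helper names are invented for the example. Note that half precision tops out at 65,504, so the brightest values in the list would not fit as raw nits; how the sim handles those is not described here, and the sketch simply flags them.

```python
import struct

HALF_MAX = 65504.0  # largest finite IEEE 754 half-precision value


def to_half(value: float) -> float:
    """Round-trip a Python float through 16-bit half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def fits_in_half(value: float) -> bool:
    """True if the value is within the finite range of half precision."""
    return abs(value) <= HALF_MAX


for nits in (2, 500, 7_000, 10_000, 600_000, 1_600_000_000):
    if fits_in_half(nits):
        print(f"{nits:>13,} nits -> stored as {to_half(nits):,.1f}")
    else:
        # Assumption: a renderer would have to scale such values before storing them.
        print(f"{nits:>13,} nits -> exceeds the half-float maximum of {HALF_MAX:,.0f}")
```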

You Can Stare at the Sun in X-Plane

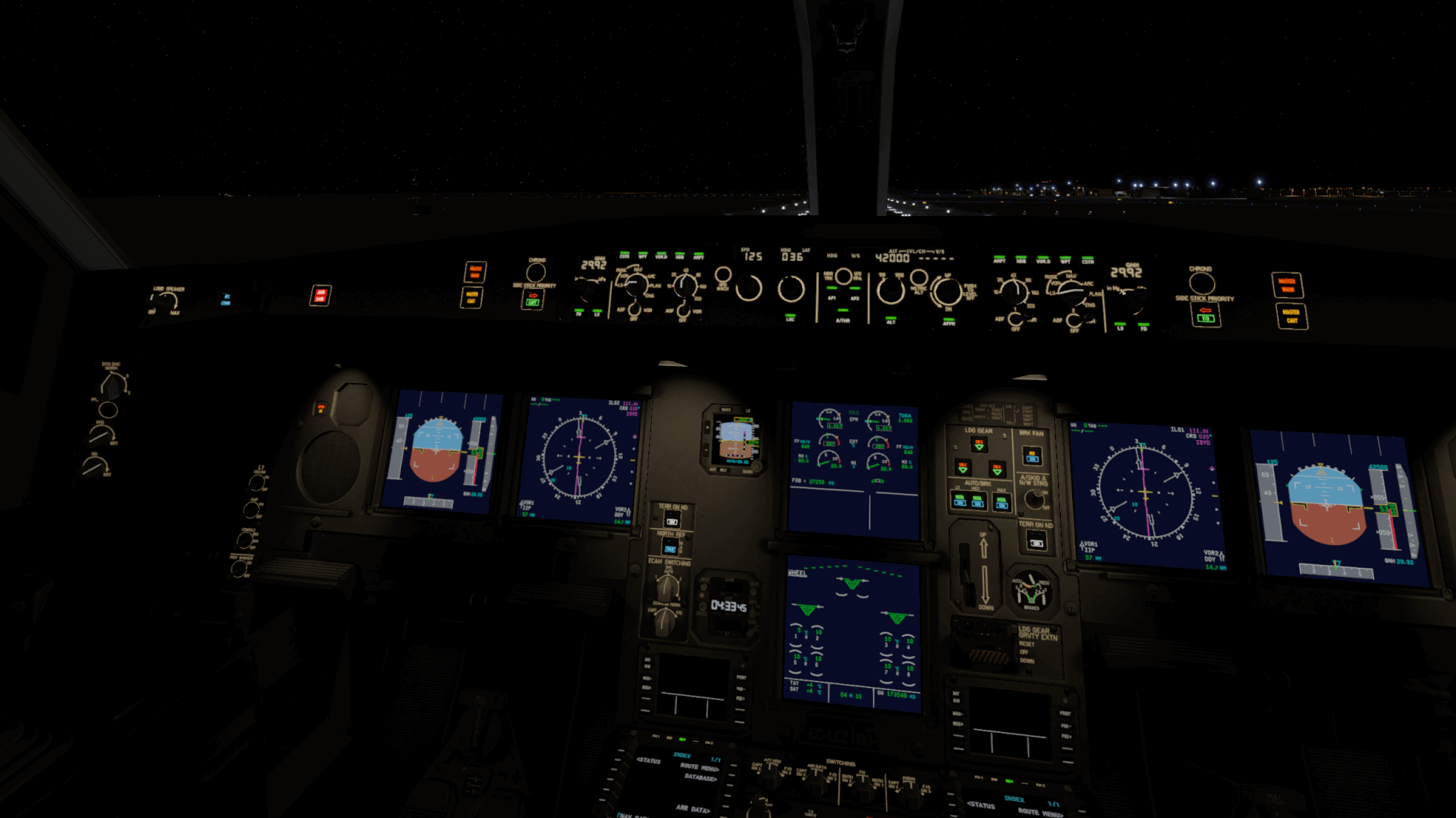

Obviously you can look at the sun in X-Plane on your LCD monitor and not suffer direct eye damage – the peak brightness of your monitor might be 100-500 nts. How do we show a scene with 10x the brightness of a monitor, or more? To solve this, we needed to model a real camera to serve as your “eyes” in the simulator. This camera in X-Plane sets an exposure value that maps our HDR scene to your monitor.

The dynamic range of computer monitors isn’t very large, though. To address that, we applied a tone mapper to the exposed image. The tone mapper is a tool that “squishes” some of the bright areas of the image so that we can fit a wider range of bright colors onto the screen at the same time. The tone mapper can give the simulated scene a look that’s more like an image from a film camera, rather than a cheap shoddy digital camera. Using the tone mapper, our art directors tuned the parameters of X-Plane’s camera to make our scene look brilliant and realistic, given the constraints of computer monitors.
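A minimal sketch of the idea, using the simple Reinhard curve `x / (1 + x)` as a stand-in. The actual tone mapping curve and exposure values used in X-Plane are not given here, so the exposure constant and function names below are assumptions for illustration only.

```python
def expose(luminance_nits: float, exposure: float) -> float:
    """Scale scene luminance (nits) into a unitless value; 1.0 is display white."""
    return luminance_nits * exposure


def clip_to_display(x: float) -> float:
    """No tone mapping: anything brighter than display white is simply cut off."""
    return min(max(x, 0.0), 1.0)


def tone_map(x: float) -> float:
    """Reinhard curve (stand-in operator): squeezes [0, inf) into [0, 1)."""
    return x / (1.0 + x)


SCENE_NITS = {"clear sky": 7_000.0, "clouds": 10_000.0, "sun at sunset": 600_000.0}
EXPOSURE = 1.0 / 7_000.0  # hypothetical: the clear sky lands at about display white

for name, nits in SCENE_NITS.items():
    x = expose(nits, EXPOSURE)
    print(f"{name:14s} clipped={clip_to_display(x):.3f}  tone mapped={tone_map(x):.3f}")
```

With plain clipping, the clouds and the setting sun both end up at the same maximum value. With the curve applied, they stay distinguishable, at the cost of everything being compressed somewhat.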

The exposure levels in X-Plane 12 are set by our art team according to time and weather conditions. They are also modulated by auto-exposure, so that as you look around the scene the camera becomes more sensitive in dark areas (to help read panels) and less sensitive in bright areas (to avoid being blinded).
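One common way to build this kind of auto-exposure is to measure the log-average luminance of what the camera sees, aim to place it at mid grey, and ease toward that target over time. The sketch below follows that textbook approach and limits how far auto-exposure may move away from a base value. It is an assumption about how such a system could look, not a description of X-Plane’s implementation; the 0.18 key, the 2-stop limit, the time constant, and all names are hypothetical.

```python
import math


def log_average(luminances_nits):
    """Geometric mean of the luminances; a small epsilon avoids log(0)."""
    eps = 1e-4
    total = sum(math.log(eps + lum) for lum in luminances_nits)
    return math.exp(total / len(luminances_nits))


def auto_exposure(luminances_nits, key=0.18):
    """Exposure multiplier that maps the log-average luminance to mid grey."""
    return key / log_average(luminances_nits)


def modulate(base_exposure, auto, max_stops=2.0):
    """Let auto-exposure shift the base exposure by at most max_stops."""
    bias = math.log2(auto / base_exposure)
    bias = max(-max_stops, min(max_stops, bias))
    return base_exposure * 2.0 ** bias


def adapt(current, target, dt_s, tau_s=1.0):
    """Ease the current exposure toward the target with time constant tau_s."""
    blend = 1.0 - math.exp(-dt_s / tau_s)
    return current + (target - current) * blend


base = 0.18 / 5_000.0             # hypothetical base exposure for a sunny day
panel = [20.0, 40.0, 80.0]        # hypothetical shaded instrument panel, in nits
sky = [7_000.0, 10_000.0, 7_000.0]

looking_down = modulate(base, auto_exposure(panel))
looking_up = modulate(base, auto_exposure(sky))
print(f"exposure looking at panel: {looking_down:.2e}")
print(f"exposure looking at sky:   {looking_up:.2e}")
```

The camera ends up more sensitive when the view is dominated by the dark panel and less sensitive when it is dominated by the sky, while the clamp keeps the result near the base value.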

A Physical Sky

Now that the sim had a renderer that accurately modeled the real world, a sky that was painted by our art team using Photoshop just wouldn’t work. It needed a new sky that would match the light values (nits) of the real sky.

To do this, we calculated the light levels of the sky by considering the composition of the atmosphere, the viewing angle, and the brightness of the sun. The sky is blue for a reason (air molecules scatter blue light much more strongly than red) and we get a blue sky by simulating that scattering effect.

This math for the sky works when looking in any direction from any location, so we not only get a blue sky, but we get the correct “blue-ish” tint when looking at the ground from the air, and this matches the sky without the artists trying to hand-paint two effects to match.
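The standard physical explanation for the blue sky is Rayleigh scattering, where the amount of scattering goes with the inverse fourth power of wavelength and depends on the angle between the sun and the view direction. The sketch below shows just those two textbook relationships; it is not the sim’s sky model, and the chosen wavelengths and function names are illustrative assumptions.

```python
import math


def rayleigh_relative(wavelength_nm: float, reference_nm: float = 550.0) -> float:
    """Scattering strength relative to the reference wavelength (1 / wavelength^4)."""
    return (reference_nm / wavelength_nm) ** 4


def rayleigh_phase(cos_theta: float) -> float:
    """Rayleigh phase function; theta is the angle between sun and view directions."""
    return 3.0 / (16.0 * math.pi) * (1.0 + cos_theta ** 2)


blue, red = 450.0, 650.0  # representative wavelengths in nanometers
ratio = rayleigh_relative(blue) / rayleigh_relative(red)
print(f"blue light is scattered about {ratio:.2f}x more strongly than red")
print(f"phase toward the sun: {rayleigh_phase(1.0):.4f}, at 90 degrees: {rayleigh_phase(0.0):.4f}")
```

Because the same relationships apply along any line of sight, the haze over distant ground picks up the same bluish in-scattered light as the sky itself.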

Lighting It Up

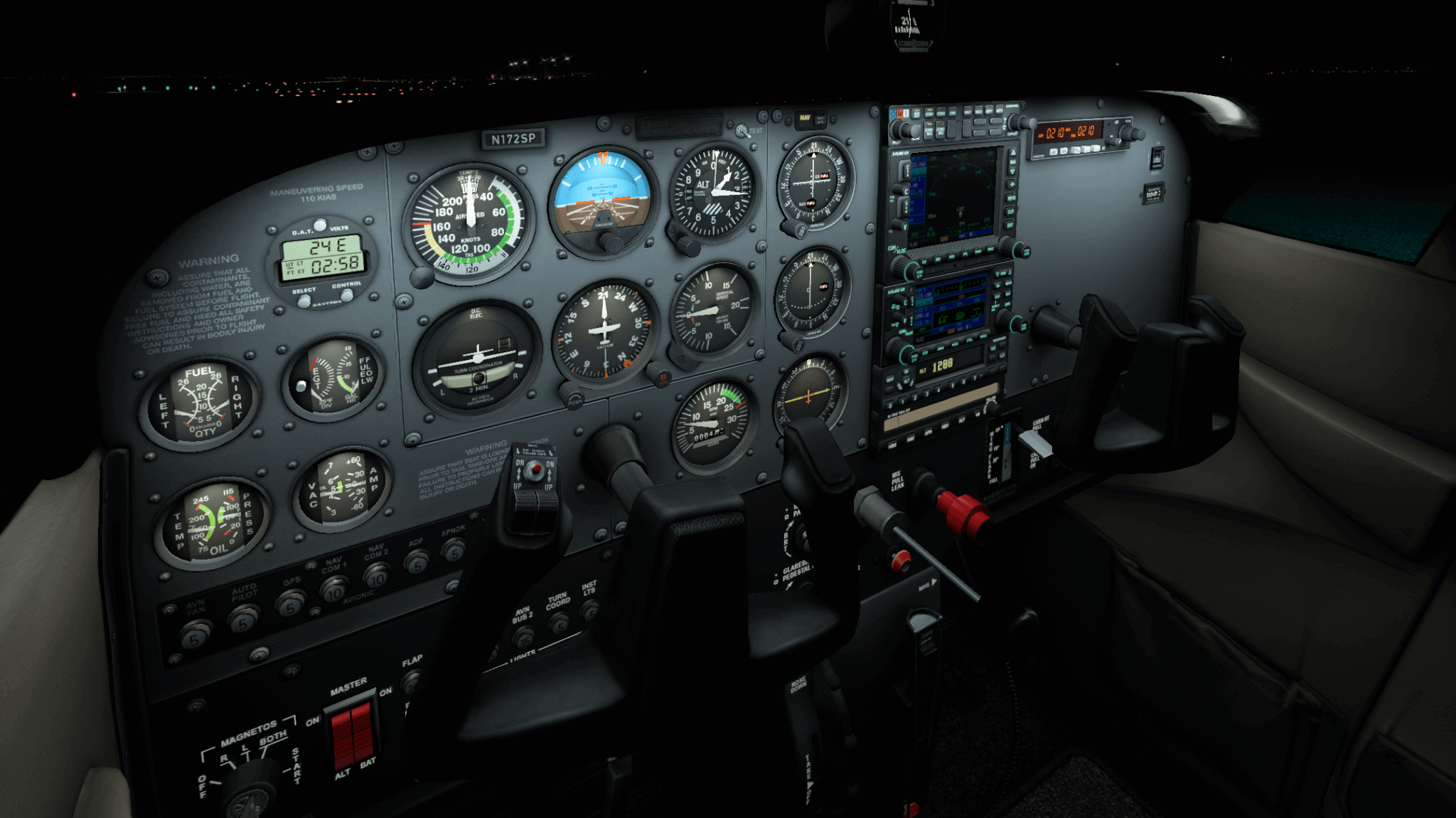

To create a photometric world in X-Plane, we needed light sources in the sim to be specified in real-world units. X-Plane comes pre-programmed with the brightness of the sun, but how bright is that LCD screen in your glass cockpit? In X-Plane 12, aircraft designers specify these values in real world units.

One of the advantages of this real world approach is that the “right” value for setting up an aircraft can come from the real specifications of the aircraft, rather than tuning some numbers in a 3D editor until it looks right.

Harmonized Results

One of the big advantages of this approach is that all of the elements that make up the sim play well together because they are all calibrated to the same standard – the real world. How bright are landing lights compared to the airport lights? How visible are the taxi lights when the sun comes out? With a photometric rendering engine, the answers are determined mathematically and by measurements that can be checked against real life, so the entire scene fits together.
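Here is a small worked example of the kind of comparison that becomes possible once everything is in physical units. It uses the inverse-square law for a point light and the usual relation for a matte (Lambertian) surface. The lamp intensity, distance, and pavement reflectance are hypothetical numbers picked for illustration, and roughly 100,000 lux is a commonly quoted figure for direct sunlight.

```python
import math


def illuminance_lux(intensity_cd: float, distance_m: float) -> float:
    """Illuminance from a point light facing the surface: E = I / d^2."""
    return intensity_cd / distance_m ** 2


def lambertian_luminance_nits(illuminance: float, albedo: float) -> float:
    """Luminance of an ideal matte surface: L = albedo * E / pi."""
    return albedo * illuminance / math.pi


PAVEMENT_ALBEDO = 0.2      # hypothetical reflectance of the pavement
LAMP_CD = 50_000.0         # hypothetical taxi light intensity in candela
SUNLIGHT_LUX = 100_000.0   # approximate direct sunlight

lamp_patch = lambertian_luminance_nits(illuminance_lux(LAMP_CD, 20.0), PAVEMENT_ALBEDO)
sunlit = lambertian_luminance_nits(SUNLIGHT_LUX, PAVEMENT_ALBEDO)
print(f"pavement lit by the lamp at 20 m: {lamp_patch:8.1f} nits")
print(f"pavement in direct sunlight:      {sunlit:8.1f} nits")
print(f"sunlit pavement is about {sunlit / lamp_patch:.0f}x brighter")
```

With these assumed numbers, the patch lit by the lamp all but disappears in daylight, and nobody has to hand-tune that result.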

With photometric rendering, we’ve taken another step closer to real life – the new X-Plane 12 renderer simultaneously produces realistic images and is more straightforward to work with, all thanks to its use of real-world values as inputs. Check out the video below for an A/B comparison between the lighting in X-Plane 11 and X-Plane 12.

Amazing work!

How will this work with modern HDR displays? Do such displays allow for better representation of the rendered world, thanks to their higher maximum brightness?

Down-stream we can add HDR monitor support. Basically the HDR of a monitor is still TINY compared to the HDR of the scene, so there’s still tone mapping and exposure control – but we don’t have to squash the image “as much”.

So e.g. on an LDR monitor, the white from the sun (it’s really bright) is max monitor color but the white from the silver lining of a cloud might be pure white too – we’ve run out of dynamic range. With an HDR monitor, we could let the sun be one of those ‘super bright’ colors (brighter than the standard UI white of the OS) which would make it pop more.

this is the kind of question/answer i was waiting for years and years.

Great article, and an unforgettable milestone. We hope monitor support for 10-bit HDR arrives in the early days once XP12 is released.

few notes:

Few words of caution:

Better-performing HDR monitors typically generate at least 600 nits of peak brightness, with top performers hitting 1,000 nits or more. The Apple Pro Display XDR, a 32-inch Retina 6K display, sustains 1,000 nits and peaks at 1,600 nits, making it the brightest desktop monitor we’ve seen to date. It also has an astonishing 1,000,000:1 contrast ratio, a very wide viewing angle, and over a billion colors presented with exceptional accuracy. No doubt it is the king of monitors in that area, but its refresh rate is only 60 Hz.

But mainstream HDR monitors offer only 100 to 300 or 400 nits, which is really not enough to deliver an HDR experience (though it is at least better than nothing). So better keep an eye on the competition in that segment.

i struggle in my projects when rendering in 3ds Max, in scenes where there is dim lighting, like candles in a cottage. here is a little exercise:

1) create a room, say 4 meters by 4 meters.

2) create a candle object and assign a light source with an intensity of two candelas,

3) and create a book object with a readable text texture on it, like a typical book page, and place the book next to the candle.

start the rendering with your favorite renderer (arnold/vray/corona render, etc.)

notice how poor the rendition is and how dark it is.

even though in the real world one candle appears to create enough light to read the text, on screen it seems a much darker environment.

there are many explanations for that; one of them is an improperly configured monitor color profile, and another is the brightness of the screen. do not waste time increasing gamma, as you would only wash out the whole scene.

bottom line — take the decision about the suitable monitor seriously, as the hardware for HDR can make a big difference if coupled with HDR enabled photometric renderer to get the best of results.

As interesting as the approach to attribute lighting effects to the underlying physics is, it should not be forgotten that our visual perception is an interplay of light and the adaptation of our visual apparatus and interpretation of what we see by our brain.

If we walk out of bright sunlight into a room illuminated by a candle, we will most likely not be able to see anything at first, after some time we will be able to see something and after enough time we will be able to read the text without any problems.

Likewise, after some time, the paper illuminated by the candle will no longer be perceived as yellow but as white.

Our eye easily masters the adaptation to different brightnesses in reality.

Is there not a risk that physically exact illumination will be judged differently, i.e. wrongly, by the viewer of the screen?

In any case, I find the new approach promising and am already very curious what the new version will look like.

Right – we model camera exposure to simulate “adapting” – but to get REAL adapting to work, if you want to fly x-plane at night, you really need to be full screen in a dark room.

Very impressive. It’s quite an advance since XP11 was first launched.

I seem to remember from photography that light, or at least the appearance, changes with latitude. E.g. you won’t find red/orange sunsets that you see in California in Alaska.

Give it a few more years and more wildfires in Alaska and you too will enjoy your very own Apocalypse Now! filter

Perhaps Austin can apply his psychedelic filter that he used in the San Diego presentation…Legendary!

They won’t say it because it’s promising a feature they don’t have worked out yet, but this lays the groundwork for EDR (a Mac specific thing for faking HDR on SDR monitors) and HDR render targets.

*swoon*

I am sooooooooo happy.

that’s just called 10 bit color on the PC side. already have that.

EDR isn’t just a wide dynamic range (E.g. “10-bit color” with the implication that the white point for the UI should not be the _maximum_ monitor output) – EDR provides feedback to the app from the OS about the _viewing environment_ (as measured by some kind of camera on the machine) about ambient light conditions, so the app can understand the point at which the monitor will run out of real brightness.

In other words: given a monitor that can do 500 nts, in a dark room, that’s a LOT of killing power, and you can get a really vivid HDR image. But if it’s sunny out and my office has a lot of windows, that 500 nts is gonna be good to see my screen but it’s not going to “pop” any more due to all of the background light.

With EDR, as the user turns their screen brightness down and blacks out the windows, the OS can figure out that the user is requesting the UI brightness to be fairly dim in a low light environment, and therefore all of the excess dynamic range of the monitor can be used for “overly bright” stuff – it reports a very high “HDR multiple” of nominal brightness, and we can use this to back off the tone mapping compression and show a more real-world image.

Then the sun comes up and the user turns up the monitor brightness to compensate – the OS can figure out that a lot more of the monitor’s real output is just competing with background noise and there really isn’t much headroom left. That HDR multiple comes way down and we can use that to put in tone mapping that is more compressed and closer to LDR.

When last I checked, the state of the PC was:

– the HDR standard specified a high peak output (in real world terms) but

– lots of monitors couldn’t do the real output and would just tone map internally without telling the app and

– the app doesn’t know the ambient light levels anyway

and therefore the app has no way to know what the hell is actually happening. In this situation all we can do is (1) enable 10-bit HDR output and (2) give the user a manual in-game brightness control and (3) pray.

I don’t think this is accurate. EDR provides extended dynamic range on “real” HDR monitors as well as LDR ones with excess output capability. (Because Apple doesn’t have to tell us what’s going on with the integrated displays on laptops and iMacs, I can’t actually say whether the window manager is running in 8-bit or 10-bit mode when EDR is in use.)

I’m so glad X-Plane is getting some pretty awesome attention now.

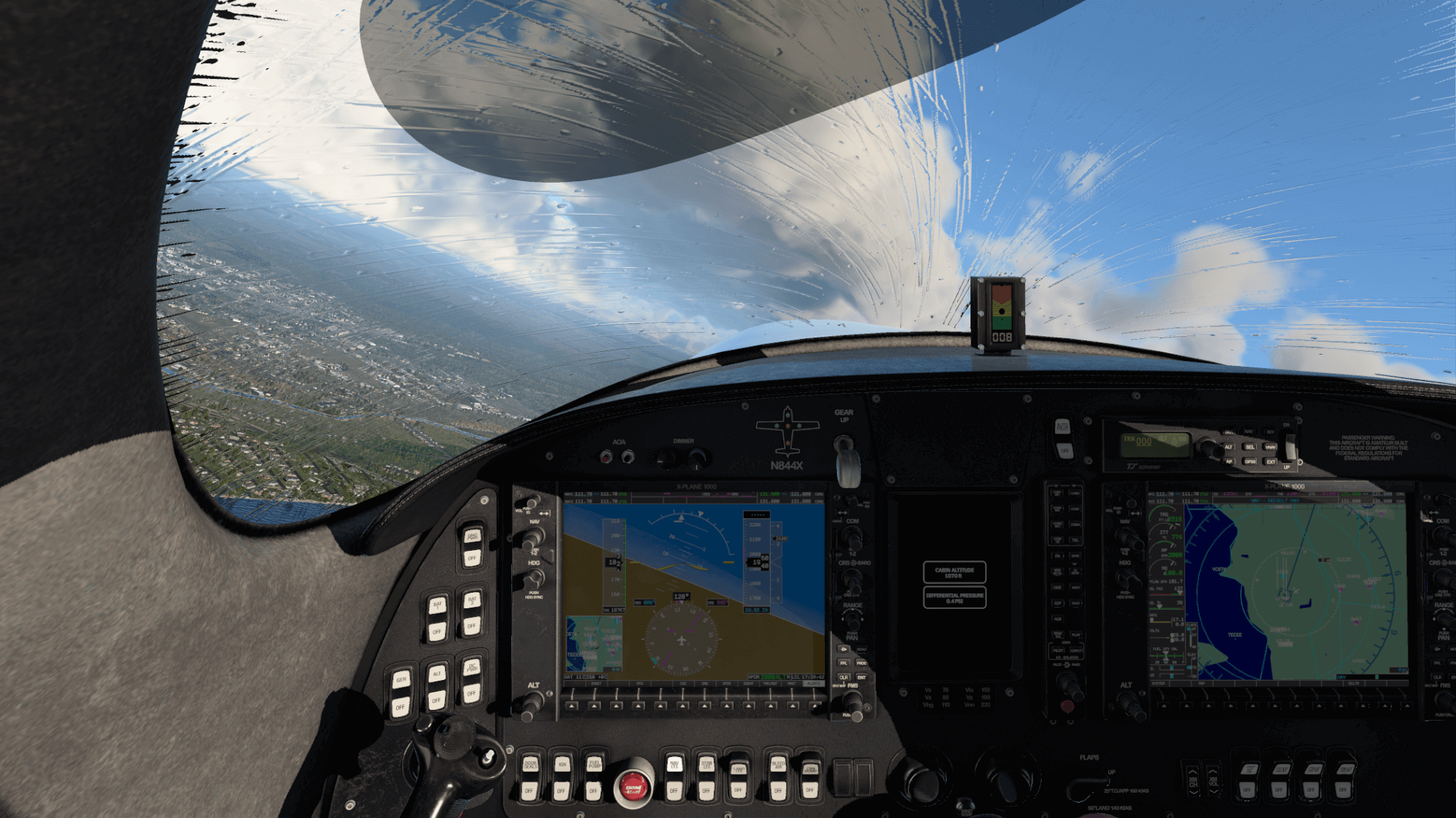

All of that sounds (looks) amazing! Also can’t wait to try my all time favorite aircraft, the Lancair Evolution, in X-Plane! Happy to see the first screenshot from the Evo’s cockpit 🙂

Amazing progress! Not jealous of MSFS 2020 anymore!

This looks incredible, but how will it look from the air? Will the problem of city lights, and others, just popping on be corrected in XP 12? Will Towns and cities look real at 30K feet instead of lit rectangles on the ground? Personally, I can’t wait to see it!!! I know it’ll be amazing!

Amazing work! Does this mean that aircraft developers won’t need to manually shade areas in the textures?

The transition from “you paint a photo onto the texture” (E.g the very best of XP6) to “you paint the material properties by channel” has been a steady change over several versions and I think was well under way even in XP11.

This all looks really fantastic. I followed all the info from Flight Sim Con and all the dev blog articles. For me, unfortunately, XP 11.55 still crashes very often with Vulkan – with XP12 being developed completely on Vulkan, will this be significantly improved from the state 11.55 is in now?

There’s a certain advantage to MSFS2020 coming out _before_ the end of the X-Plane 11 run. Looks stunning, Ben…!

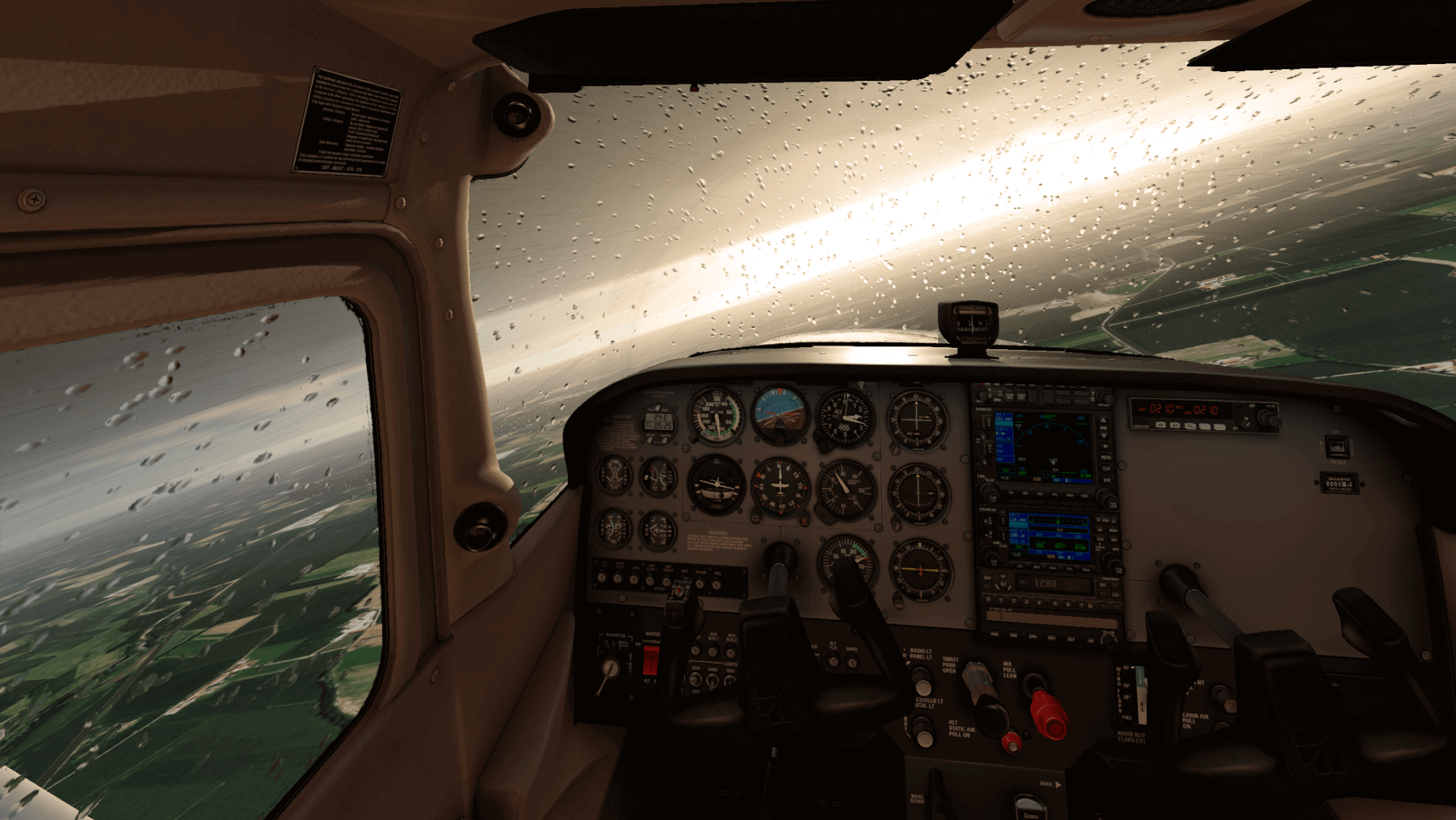

Love the rain effects!

Honestly, the capabilities look very promising, but the explanation is only about lightness. What about color? Do you still set colors in sRGB (e.g.), or do you use XYZ, L*a*b* or Yxy or Yuv now? Agreed, color resolution is not that high, but rescaling sRGB to real-world lightness seems like a bad idea.

What I’m worried about is how much CPU and GPU those 16-bit floats will burn for each pixel. Will it all be crawling slow unless you have a $1000 GPU?

The color space is still sRGB. We don’t have out of gamut material because the source albedos are authored in sRGB and the _mixing_ of colors never pushes us further out of gamut than the source material. I don’t think wide gamut rendering would be in any way noticeable in a flight sim.

I agree that most textures/materials themselves likely won’t land out of gamut, but won’t materials possibly go out of gamut at dawn/sunset when the light source goes out of gamut?

In a video on Blender’s Filmic rendering, one of the things they talked about is how as brightness goes up, colors drop in saturation ( //youtu.be/m9AT7H4GGrA?t=1328 ). Is that effect present in XP12’s new renderer?

Yep – Timothy Lottes calls that the “path to white” in his tone mapping GDC talk. We have an internal control that lets the artists control how ‘fast’ we desaturate.

Well, actually I have a sunset shot where the orange tones easily exceed the huge BT2020 color space. I’d like to share, but download links are not wanted here.

I’m impressed you guys went through all of this to fix those dirty grey chem… err, contrails!!! Oh wait, this fixes them, right? ; )

Wow, it is hard to not get hyped for xp12 when seeing these shots. The lighting looks incredible!

Ahn, we need the Cessna picture with the windshield rain from N844X rain picture, s’il vous plaît

Dear Devs,

may I ask: do you consider the task of putting all the pieces together (like this one) for X-Plane 12 to be on a good track, or are you facing bigger challenges in the process?

I ask because I cannot estimate whether it makes sense to hope to see a first beta by the end of the year, or instead just keep calm for another year before getting excited.

Could you please give us a rough time horizon?

thank you and kind regards

Steve

How will this new light rendering affect ultraviolet transmission, like the various stages of light and heat sensors?

In other words, what can we expect for Night Vision Goggles and FLIR?

//up.picr.de/42378981kx.png

We are not modeling out-of-band UV/IR – it’s too niche to eat the cost of a true spectral renderer. We can do a better night vision effect with the new pipeline probably.

Love it!! Art imitating life is definitely the way to go!!

I was too late to comment on the scenery RFC, but it seemed to me that the layer based approach X-plane has works very well — mesh, orthophoto, overlays, 3d scenery ( library and user created objects ).

IMHO the scenery developer should “own” their tiles, but be able to specify their layer choices… keep sim mesh or replace it, keep sim basic textures or replace with new ortho, keep sim overlays or replace with new ones and supersede or blend with 3d objects on the tile.

Having a Xorganizer type gui to add-remove scenery, including the ability to globally and locally ( per tile ) select what layers are turned on, or should be used would be v helpful.

Will scenery developers be able to define the type of lights in the scenery? For example, approaching Louisville, KY at night, you can easily pick out the airport because it has brighter and whiter lights than the rest of the city.

Scenery developers can customize all aspects of the scenery lights.

Hey ben!

Thanks for this wonderful insight, it looks brilliant.

Few questions though:

– I have used X-Plane since v11 Vulkan, but I saw some shots of northern lights from previous versions. With the new atmosphere and lighting, I wonder if there are any plans to bring them back?

– Regarding night shots, any plans for some light pollution over urban areas, maybe based on weather and OSM/other data?

– How does the moon look in the new photometric pipeline and atmosphere model? In terms of moon phases and overall night lighting as a result, on clouds and scenery?

– are shadows at night considered?

Thanks again ben!

Howdy!

Those screens are looking pretty sweet!

Please add lighting flashes to your list of goodies!

Cheers…

That’s for XP13 newest lighting model.

What about those three little words: Performance, Performance, Performance??

Excuse a ‘noob’ comment, but I seem to remember somewhere in the forums, a mention that this new lighting engine is going to correct the ‘ambient light in the cockpit’ and in other words, I’ll be able to read my instruments instead of shadows blacking them out in most weathers !

Could you just confirm ? The above screenshots still show a lot of instruments overshadowed … er .. by shadow ?

thanks Ben.

Binky

It’s something we’re still working on – the new engine made it WORSE because the contrast levels between sun and shadow are now at real world levels, which are quite extreme.

This has bothered me over the weekend – how on earth do you expose for two things on one screen?

I’m guessing that’s the problem – the same as taking a photo of a person in a room in front of a window. Do you expose for the dark person or the bright window?

Idea: Mandate VR from now on. Solved.

VR doesn’t help – you’d need eye tracking. In real life your eyes only expose for one thing – but that thing can change based on exactly what you are looking at.

A friend of mine who majored in CS at McGill said they had an eye tracker (insane to have since this was twenty+ years ago) and someone wrote a program to change text in real time except in the center of gaze. The result was apparently quite maddening. The text was clearly changing _and you could never see it happen_.

This is really an interesting topic.

Will there be some kind of locally based algorithm (different exposure/tone mapping on different parts of the screen)?

Or will it be just one smart tone mapping for the whole image, that tries to maintain enough contrast in the important areas (instruments etc.) and adapts over time to the current light levels?

Anyway, if the result looks as great as the Cessna raindrops screenshot above, it will be just amazing. Thanks for the insights and the beautiful moments we will have flying into a sunset with XP12!

Thank you Ben,

for a very detailed explanation of the work required to better render light in 12. I look forward to all your blogs. An off topic question: are there any plans to make it easier for an unskilled future user to change ICAO call letters and numbers on the wings, tails and bodies of aircraft? As far as I was able to figure out, at present one has to convert a DDS to a PNG, clone out the existing numbers, text-edit in new ones, then convert back to a DDS. This has to be done for multiple files in the aircraft livery object folder. It took this old fart a complete day just to do the daytime textures on the stock Skyhawk.

Not right now but it’s a good request – one of the tricky things is: where does the _font_ come from that sets up the tail?

Looks great! Hopefully, the AMD driver issue that causes a flashing screen on an RDNA2 6900XT GPU with certain plugins, aircraft, etc. goes away.

This makes me wonder :

Will it be possible to place blue reflectors on the sides of the taxiways?

Indeed, many airports have no blue lights, but only reflectors, for obvious cost reasons. The visual result is significantly different and makes nights much darker, in the part of the scene that is not lit by landing and taxi lights…

Man, I wish there was a “hand you guys a pile of cash” to get this early option.

I am really looking forward to the immersion these atmospherics provide. V 10/11 helped with sense of scale and motion. 12 looks like it will trick the brain into a sense of “there”