For a while we’ve been getting the rare report from a user that our installer won’t download – usually it dies mid-download on a given file.

(Our servers transfer a complete 600 MB demo copy of X-Plane once every 8 minutes, all the time. So if we get a report of a problem from one isolated user, it’s almost always specific to the user. Systemic problems like a bad server generate a ton of email.)

One user stuck with us through some via-email debugging and we finally figured out one of the causes of failed downloads: popup blockers!

The X-Plane installer is built around simple off-the-shelf technology where possible. In particular, files are zipped and sent via http. This means we’re using openly available existing standards, and we get to leverage free code. (The biggest win is being able to use “plain old apache” for serving the installer.)

To the operating system and any firewalls involved, the X-Plane installer doesn’t look that different from a web browser. It goes to a web server and requests a big pile of files, a few at a time.

It turns out some popup blockers will detect patterns in URLs and automatically substitute the web content! So we go out asking for an object called com_120_60_1.obj.zip and the popup blocker decides that (perhaps because it’s a zip file and has 120_60 in it) the file shouldn’t be made available. It substitutes a 1-pixel black GIF file that the browser can show inconspicuously.

Of course, that one-pixel black file doesn’t look anything like a zip file – when our installer gets it, the installer has a fit.

This bug was a mystery before – what would cause our servers to send just one user a tiny GIF file, and only in one case? Now that we know that the software is designed to zap web advertising, it makes perfect sense.
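One way an installer could catch this kind of substitution early is to look at the first few bytes of what it received before trying to unzip it. The sketch below is not the installer's actual code; the function names are made up for illustration. It relies only on the standard file signatures: zip archives start with `PK`, GIF files start with `GIF87a` or `GIF89a`.

```python
ZIP_MAGICS = (b"PK\x03\x04", b"PK\x05\x06")  # local file header, or empty archive
GIF_MAGICS = (b"GIF87a", b"GIF89a")


def sniff_payload(data: bytes) -> str:
    """Classify a downloaded payload by its leading magic bytes."""
    if data.startswith(ZIP_MAGICS):
        return "zip"
    if data.startswith(GIF_MAGICS):
        return "gif"
    return "unknown"


def check_download(name: str, data: bytes) -> None:
    """Raise a descriptive error if a .zip download is not actually a zip."""
    kind = sniff_payload(data)
    if name.endswith(".zip") and kind != "zip":
        raise ValueError(
            f"{name}: expected a zip archive but received {kind} data "
            f"({len(data)} bytes); something between the client and the "
            "server may have substituted the content"
        )
```

With a check like this, a swapped-in GIF produces an error message that points at the real cause instead of a generic unzip failure.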

With X-Plane 860, we now mark the X-Plane executable as being capable of using 3 GB of virtual memory on Windows. This means that if your copy of Windows can support large address spaces and you have a powerful enough PC, you can now use more add-ons. (Mac and Linux have always gone to 3 GB by default.)

You can read more about setting Windows up to handle large address spaces here. Please don’t try this if you don’t know what you’re doing, and please don’t contact our tech support if you destroy your copy of Windows — we can’t support the whole OS.

Please note that this change does not improve the hardware capabilities of your computer – it only enables X-Plane and Windows to take full advantage of what you have. You will not see a performance boost because of this.
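For the curious: on Windows, marking an executable this way is normally done with the "large address aware" bit in the executable's PE header. The post does not say exactly how X-Plane sets it, so treat that as an assumption. The sketch below only reads the bit from the raw bytes of an executable; the function name is hypothetical.

```python
import struct

IMAGE_FILE_LARGE_ADDRESS_AWARE = 0x0020  # PE/COFF "Characteristics" bit


def is_large_address_aware(exe: bytes) -> bool:
    """Return True if a Windows PE image has the large-address-aware bit set."""
    if exe[:2] != b"MZ":
        raise ValueError("not a DOS/PE executable")
    # e_lfanew: file offset of the PE signature, stored at offset 0x3C.
    (pe_offset,) = struct.unpack_from("<I", exe, 0x3C)
    if exe[pe_offset:pe_offset + 4] != b"PE\x00\x00":
        raise ValueError("PE signature not found")
    # Characteristics is the last 2-byte field of the 20-byte COFF header
    # that follows the 4-byte signature, so it sits 18 bytes into that header.
    (characteristics,) = struct.unpack_from("<H", exe, pe_offset + 4 + 18)
    return bool(characteristics & IMAGE_FILE_LARGE_ADDRESS_AWARE)
```

To try it, read an executable with `open(path, "rb").read()` and pass the bytes in. The bit only says the program can cope with a larger address space; Windows still has to be configured to provide one.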

I’ve been meaning to write this blog entry for about a year now. X-Plane 8 allows the airport surface area to be sloped. Here’s some of the back story and details.

Back when Austin and I were doing the design work for the X-Plane 8 scenery system, we made a decision to allow sloped runways. The issue is that flattening the airport area requires the sim to edit the mesh on the fly, something we wanted to avoid.

(X-Plane’s scenery system is based on removing the editing of scenery from the sim itself…”X-Plane is not a GIS”. I did some slides on this once, I’ll try to post them soon.)

Instead we decided to simply drape the airports over the terrain, no matter how it was built. We figured that the sim’s engine could handle this (as it turned out, there were bugs that were fixed in the 8.20 patch) and in real life runways are often quite sloped!

Unfortunately when theory meets practice, things can get ugly…the biggest problem we saw was that in the original set of DSFs, the underlying terrain was very bumpy, and the smoothness requirements for a plane to take off are very high. (Flattening is also necessary to match the ground height with other sims for online flight.)

So we retrofitted the X-Plane engine with terrain flattening for airports. The flattening engine is meant as a last resort, to get absolute flatness and repair an already-flattened DSF. Its goal is not visual quality, but rather speed — that is, we can’t take 10 minutes analyzing the DSF each time we load one, or the sim will freeze pretty badly. (If this requirement that the flattener be fast ever goes away, we could do a much nicer job of flattening.)

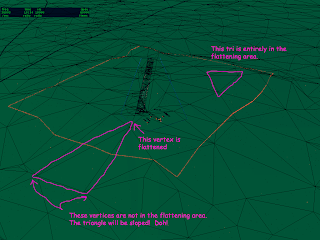

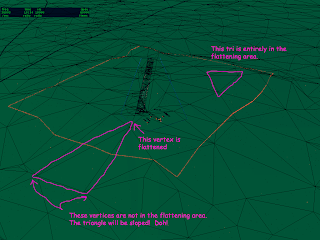

The current flattening engine has two unfortunate properties designed to keep it fast:

– It flattens an area that is larger than the airport surface area (it rounds up) and

– It flattens vertices that are in the flattening area, not whole triangles.

The first limitation means that it may crush mountains around the airport, and is not appropriate for airports that are embedded in complex, hilly terrain.

The second limitation needs more examination. If a mesh triangle is partly inside the flattening area and partly outside, then the triangle is not flattened – one vertex is moved, and the others aren’t, which causes it to be sloped.

In these pictures, the blue lights represent the airport area perimeter, but the red lights show the full area that is flattened. I have artificially set the airport elevation much higher than surrounding terrain, to make the flattening obvious. Notice how some triangles become highly sloped!
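To make the per-vertex behavior concrete, here is a toy sketch (not X-Plane's code; the rectangle test, types, and names are all assumptions for illustration). It moves only the vertices that fall inside the flattening area, then reports the triangles that end up with some vertices moved and some not. Those are the ones that become sloped.

```python
from typing import List, Tuple

Vertex = Tuple[float, float, float]        # (x, y, elevation)
Triangle = Tuple[int, int, int]            # indices into the vertex list
Rect = Tuple[float, float, float, float]   # (min_x, min_y, max_x, max_y)


def inside(v: Vertex, area: Rect) -> bool:
    """True if the vertex's (x, y) position lies in the flattening rectangle."""
    min_x, min_y, max_x, max_y = area
    return min_x <= v[0] <= max_x and min_y <= v[1] <= max_y


def flatten_vertices(verts: List[Vertex], area: Rect, elevation: float) -> List[Vertex]:
    """Set the elevation of every vertex inside the area; leave the rest alone."""
    return [(x, y, elevation) if inside((x, y, z), area) else (x, y, z)
            for x, y, z in verts]


def straddling_triangles(verts: List[Vertex], tris: List[Triangle], area: Rect) -> List[Triangle]:
    """Triangles with some, but not all, vertices inside the area."""
    result = []
    for tri in tris:
        count = sum(inside(verts[i], area) for i in tri)
        if 0 < count < 3:
            result.append(tri)
    return result


verts = [(0, 0, 10), (1, 0, 10), (0, 1, 10), (5, 5, 10)]
tris = [(0, 1, 2), (1, 2, 3)]
area = (-0.5, -0.5, 1.5, 1.5)
flat = flatten_vertices(verts, area, 100.0)
sloped = straddling_triangles(verts, tris, area)
```

In this example the first triangle is fully inside and ends up flat at the new elevation, while the second keeps one vertex at the old elevation and becomes steep. A per-vertex pass like this is cheap because it never has to split or re-triangulate the mesh.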

When we make the global base scenery, we use the default airports from Robin’s database. So even if you do not want to submit your custom airport layout to Robin’s database, consider submitting some kind of layout to Robin. If an airport is present in the default scenery, then the area will be pre-flattened, which makes the sim’s flattening both work better and maybe even unnecessary.

Also, you can use the 130 code in a custom airport area to increase the airport boundaries, increasing the amount of flattening. But this is a mixed blessing – as you can see the mechanism is very imprecise. If you do use a 130 code in a layout and you submit the layout to Robin, please remove any 130 boundaries that have been set to a large area to flatten an airport.

I realize this blog post will probably just inflame a bunch of email about how the scenery tools aren’t available yet, but I’ll answer the question and take the flames, because it’s a fair criticism and scenery tools are a fair feature request.

The long term direction of scenery tools is this:

- Scenery tools will be separate from the X-Plane distribution, free, and open source. (This separation allows us to post scenery tool source without posting X-Plane source, and to use GPL code in the scenery tools.)

- A few very basic editing functions (like adding nav-aids) are integrated into the sim to allow instructors to correct nav data during a training session.

- WorldMaker therefore is no longer a scenery editor at all.

So why haven’t we killed it? We’ve been tempted to. But it will serve a long term purpose in the scenery tools ecosystem: it will be a small-footprint 3-d scenery previewer.

Because the scenery tools don’t use X-Plane code, the scenery tools will have two limits to their previewing capabilities:

- There is always the risk that with different code, the tools will preview scenery differently from X-Plane’s final render.

- Because the scenery tools don’t use X-Plane’s renderer, we basically have to rewrite viewing code in the scenery tools from scratch. That’s a lot of code, so for a while the preview in the tools will be limited.

Running X-Plane and the scenery tools at the same time isn’t a great option – since X-Plane loads a lot of scenery, and a weather model, and a plane, and then tries to run at max fps, it tends to be a bit of a pig in terms of system resources. WorldMaker will be a viewer that can reload your scenery quickly so you can have a 3-d view of what your end result will look like that will match X-Plane.

Posted in Tools by Ben Supnik

Just a gentle reminder: please do not consider anything in a beta to be final!

By this I mean: there is a risk that file formats that are new to the current sim (860) could further change during the current (860) beta run.

So…if you are working on scenery that depends on new 860 features, please do not ship your final scenery until 860 is finished! This way you can be sure you’re compatible with the final formats.

If your scenery uses 850 features and 860 breaks them, please report a bug, as 860 should handle any legal scenery that 850 handles.

(This goes for plugin datarefs too!)

I found the reason for frame-rate loss for Logitech sticks…everything in this entry is Mac specific.

X-Plane has (for a long time) done a rather poor job of parsing the HID descriptors that the driver provides for USB joysticks on OS X. However it turns out that in the case of Logitech, after we found the 4 axes the joystick usually has (pitch, roll, yaw and throttle) we then went on to misparse a bunch of strange stuff at the end of the stick as more axes. It turns out that reading those axes causes some kind of huge framerate loss. I don’t fully understand this, but given the HID spec I’ve seen I’m not hugely surprised.

Why is this x86-Mac specific? I don’t know! Why is this so much worse in 860? Well, in 860 we raised the number of axes from 6 to 8. With more axis slots, we tried to read more incorrect parts of the Logitech stick, for more fps loss.

The solution is one that’s been a long time coming: I’ve rewritten a bit of our Mac HID-parsing code to do a better job of figuring out what’s a joystick element and what’s not.
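As a rough illustration of what "figuring out what's a joystick element" can mean, here is a sketch that filters HID elements by usage page and usage before treating them as axes. This is an assumption about the general approach, not the actual X-Plane code: the `HidElement` type and function names are invented, and only the usage numbers come from the published USB HID usage tables.

```python
from typing import List, NamedTuple

GENERIC_DESKTOP_PAGE = 0x01
SIMULATION_PAGE = 0x02

# Usages that plausibly describe a real axis.
AXIS_USAGES = {
    GENERIC_DESKTOP_PAGE: set(range(0x30, 0x39)),  # X, Y, Z, Rx, Ry, Rz, Slider, Dial, Wheel
    SIMULATION_PAGE: {0xBA, 0xBB},                 # Rudder, Throttle
}


class HidElement(NamedTuple):
    usage_page: int
    usage: int
    logical_min: int
    logical_max: int


def is_axis(e: HidElement) -> bool:
    """Accept only elements with a known axis usage and a non-empty range."""
    return e.usage in AXIS_USAGES.get(e.usage_page, ()) and e.logical_max > e.logical_min


def find_axes(elements: List[HidElement], max_axes: int = 8) -> List[HidElement]:
    """Keep recognized axes, in device order, up to the number of axis slots."""
    return [e for e in elements if is_axis(e)][:max_axes]
```

The point of the filter is that anything on a vendor-defined page, or with a usage that is not an axis, never gets an axis slot, so it is never polled as one.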

The bad news is: for the next few betas there may be some broken joysticks. If you have a joystick and it stops working in the next beta, please file a bug and include as much specific info about your joystick as you can.

The good news is: I think we will get almost all of the fps back on x86 Macs – Logitech users will not need to get new sticks.

A side effect is that we should no longer have “dead” slots – that is, buttons and axes that didn’t do anything. This should allow you to use more of your X-52 if you have one.

For X-Plane 860 beta 6 I think we may change the way the airport lights setting works. Since (despite my previous rantings) there is a lot of discussion of performance based on settings (e.g. “I used to get 40 fps with this menu pick, now I need this other menu pick”) as opposed to based on what the sim is actually doing, allow me to go off in some detail about what’s going on under the hood.

During X-Plane 850 and 860, I tried to fix a number of long-standing “quality” issues, where the sim had small artifacts. There is a fps penalty to fixing these artifacts that I thought was small, but the message is clear:

- Some users are flying X-Plane on lower-end systems and can’t spare a single fp.

- A lot of users really don’t care about the quality issues and would gladly trade back the visual “improvement” (which doesn’t seem like an improvement after living with the issue for 4 years) for those few fps that mean the difference between clear skies and fog.

So…with that in mind, let me explain runway lights for 3 versions of X-Plane.

X-Plane 840 and earlier

In X-Plane 840, lights could be textured or untextured. This was controlled by a simple checkbox “draw textured lights”. A few lights (on the airplane, for example) were always textured because we thought they were few in number and important visually. The rest was decided by the checkbox.

No airport light ever had a 3-d “structure” (e.g. you couldn’t see the light housing and support rod).

No light was ever drawn using hardware acceleration, even if the graphic card had pixel shaders; we simply didn’t have the code. Therefore textured lights ate up a lot of CPU power and thus ate up a lot of framerate. But life was simple!

X-Plane 850

With X-Plane 850 things got a lot weirder.

- We rewrote the lighting engine to optionally use pixel shaders if present. This improves performance of textured lights a lot. In fact, on a given machine with pixel shaders, textured lights with hardware acceleration are faster than non-textured lights without hardware acceleration. (For hardware accelerated lights, the texturing doesn’t cost anything compared to hardware accelerated untextured lights.) It’s important to note this performance fact!

- Sergio created a bunch of 3-d object lighting fixtures for the runway environment. They have a low LOD, but up close you can see the actual lighting structure.

So what to do about that setting? Well, basically with the check box on we did the nicest looking thing, and with the check box off, we did the fastest thing. (The check box’s label doesn’t do a good job of representing this.) We thus have four possibilities:

- Checkbox off, no pixel shaders: we draw untextured lights using software, no objects.

- Checkbox off, with pixel shaders: we draw textured lights using hardware, no objects.

- Checkbox on, no pixel shaders: we draw textured lights using software, with objects.

- Checkbox on, with pixel shaders: we draw textured lights using hardware, with objects.

This is plenty confusing if you ask yourself “what is this checkbox going to do” – it depends on your hardware. But the rule is quite simple if you consider the intent: we either give you the fastest (off) or nicest (on) rendering we can.
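The four cases above collapse into three simple rules, which the following sketch spells out. The type and function names are made up for illustration; this is a restatement of the table, not the sim's code.

```python
from typing import NamedTuple


class LightMode(NamedTuple):
    textured: bool   # lights drawn with a texture
    hardware: bool   # lights drawn via pixel shaders rather than software
    objects: bool    # 3-d lighting fixture objects drawn


def light_mode_850(checkbox_on: bool, has_pixel_shaders: bool) -> LightMode:
    """Map the 850 checkbox and the hardware capability to what gets drawn."""
    return LightMode(
        # Texturing is free in hardware, so it is only skipped in the
        # "fastest" setting on machines without pixel shaders.
        textured=checkbox_on or has_pixel_shaders,
        # Hardware acceleration is used whenever it is available.
        hardware=has_pixel_shaders,
        # The fixture objects are purely a quality feature.
        objects=checkbox_on,
    )
```

Seen this way, the checkbox really controls only two things (objects, and texturing on older hardware); everything else follows from what the graphics card can do.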

X-Plane 860

With the next X-Plane 860 beta, we’re going to use this checkbox to control multiple aspects of the sim, but always with two choices: either prioritizing visual quality, or prioritizing framerate.

So this option will not only pick the fastest (or nicest) light code and turn the objects on and off, but it will also, for example, turn on or off per-pixel fog (something that looks nice but is slower than the old per-vertex fog).

I’m not sure everyone will like this, but I think it will meet some important needs:

- It will make it obvious how to set the checkbox. Either you want speed, or quality.

- It keeps the config interface simple for new users.

- It gives us a way to tie in a number of small optimizations that add up when taken in concert.

It seems to me that we’re seeing the haves and have-nots. If you’ve got a Core 2 CPU and a 7000-series GPU, things like per-pixel fog are chump change. If you’ve got a G4 with a Radeon 9200, you need any speed you can get. So hopefully this change will make the UI clearer and help us meet everyone’s needs.

I think the framerate test may be the best piece of code I’ve ever written for X-Plane. It speeds up my own work checking X-Plane’s performance (and removes the risk of careless error), allows us to easily collect user-based stats, and has changed the dialog on performance in the beta from he-said/she-said (the result of having no precise quantitative measurements) to a fact-based dialog where we can discover problems more quickly.

Today in a noble sacrifice (using this card is nothing less than torture) I put a GF-FX5200 back in my G5 to look at bugs and performance. (The terrain night lighting and landing lights should be back in all nVidia hardware with shaders in the next beta.) Some things I’ve learned:

Turning off GLSL shaders boosts fps from 46 to 55 with my Radeon 9600XT, but from 22 to 45 with the FX5200. From that we can learn:

- The penalty for GLSL shaders is really really high on the FX5200.

- The shader implementation for the FX5200 just doesn’t run at the same speeds we see in R300-based cards…it’s not in the ballpark.

Looking at a profile of the sim, with the FX5200 running shaders, about 50% of the time is spent waiting for the graphics card to chug.
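Framerates are easier to compare when converted to time per frame. The small sketch below does that for the fps figures quoted above; the function names are just for illustration.

```python
def frame_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds."""
    return 1000.0 / fps


def shader_cost_ms(fps_with: float, fps_without: float) -> float:
    """Extra milliseconds per frame spent when shaders are enabled."""
    return frame_ms(fps_with) - frame_ms(fps_without)


radeon_cost = shader_cost_ms(fps_with=46, fps_without=55)   # Radeon 9600XT
fx5200_cost = shader_cost_ms(fps_with=22, fps_without=45)   # GeForce FX5200

print(f"9600XT: {radeon_cost:.1f} ms per frame for shaders")
print(f"FX5200: {fx5200_cost:.1f} ms per frame for shaders")
```

That works out to roughly 3.6 ms per frame on the 9600XT against roughly 23 ms on the FX5200. The FX5200 figure is about half of its 45 ms frame, which is in line with the profile showing about 50% of the time spent waiting on the card.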

Other things I’ve found:

- GLSL shaders are slower on terrain, but still worth it for runway lights – having those be hardware accelerated is definitely a positive.

- Per-pixel fog doesn’t affect fps on the 9600XT but is a measurable penalty on the FX5200.

- The landing light also hits the FX5200 about 20% – again it’s negligible on the 9600XT.

The moral of the story may be: we need a way to not use shaders for terrain on the FX5200. Between the little things that shaders give us (that we can’t use) that cost too much and the overall lack of performance, GLSL terrain and the FX5200 may not be a good idea.

If you have an FX5200 and OS X 10.3, there may not be any benefit to updating to 10.4 – you’ll have the option of using GLSL shaders but you may not want to.

If you have a G5 and an FX5200 and the option to put a new card in, you may want to consider it – you’ll almost certainly get a fps boost (especially if you use something like an X850).

One user posted this comment in response to my rant on framerate…

“What I wonder in X-Plane and FS2k4 as well is, why there is no better automatic regulation which automatically set object density, object quality and so on based on the current frame rate….”

This is a good idea that has been suggested before. However when I tried it, it worked very poorly for two reasons:

- Because we reduce visibility as well when fps fall, if we also tie object density/distance to framerate, then we have two separate variables responding to framerate. The result was oscillations. Think of framerate and visibility as a (hopefully) damped oscillator. With two variables, getting the oscillation out is a lot harder.

- The relationship between object density as the sim controls it and framerate is not a static one, because object density (relative to other scenery complexity) varies a huge amount with locale. In the mountains, backing down objects won’t save your framerate. In the city it will. So coding the “spring factor” for that oscillator is very difficult.
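The first point can be seen even in a very crude model. The sketch below is a toy, not the sim's code: it assumes framerate drops linearly as settings go up, and that each controlled setting is nudged in proportion to how far fps is from a target. All of the constants are arbitrary.

```python
from typing import List


def simulate(controlled: int, gain: float = 0.8, steps: int = 10) -> List[float]:
    """Return the fps error at each step of a toy feedback loop.

    fps = budget - sum(settings); the first `controlled` settings each
    move by gain * error every step.
    """
    budget, target = 100.0, 30.0
    settings = [10.0, 10.0]  # e.g. visibility and object density, arbitrary units
    errors = []
    for _ in range(steps):
        fps = budget - sum(settings)
        error = fps - target          # positive: fps to spare, so raise settings
        errors.append(error)
        for i in range(controlled):
            settings[i] += gain * error
    return errors


def sign_flips(errors: List[float]) -> int:
    """Count how often the error changes sign, i.e. how often we overshoot."""
    return sum(1 for a, b in zip(errors, errors[1:]) if a * b < 0)


one_variable = simulate(controlled=1)   # only visibility reacts
two_variables = simulate(controlled=2)  # visibility and density both react
```

With one controlled setting the error shrinks steadily and never changes sign. With two settings reacting to the same error, the combined correction overshoots on every step, so the framerate bounces above and below the target. In this linear toy, halving the gain would fix it, but that only works if you know how strongly each setting affects framerate, which is exactly what the second point says varies by locale.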

That doesn’t mean we won’t try this again in the future – that’s just what happened when I tried my first naive attempt.

Other comments reflected users either hoping for more fps from existing hardware (there’s a lot of that on X-Plane.org) or saying that X-Plane runs well on their modern shiny computer.

Here’s some stats for you:

- Radeon 9200: 1000 MT/sec 6.4 GBi/sec

- GeForce 5200 FX (64-bit version): 1000 MT/sec 2.7 GBi/sec

- Radeon X1950XTX: 10,400 MT/sec and 64 GBi/sec

- GeForce 7900 GTX: 15,600 MT/sec and 51.2 GBi/sec

(These numbers come from Wikipedia – I make no promises.) The first number MT/sec is mega-texels per second and represents how many millions of pixels* the card can fill in. GBi/sec is gigabits per second of data transfer from VRAM to the GPU. Neither of these is a perfect predictor of card speed (though they do limit your ability to run with a lot of FSAA), but they do illustrate something:

The difference between the speed of the smallest and biggest graphics cards is over 15x for fill rate and nearly 24x on memory bandwidth! That’s roughly the equivalent of people trying to run with a 3 GHz and a 120 MHz CPU! Anyone running with a 120 MHz Pentium out there? Definitely not – our minimum CPU requirements are ten times that.
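The ratios can be worked out directly from the four cards listed above. This is just the arithmetic, using the figures as quoted in this post; the variable names are made up.

```python
# (fill rate in MT/sec, memory bandwidth as listed above)
CARDS = {
    "Radeon 9200": (1000, 6.4),
    "GeForce 5200 FX (64-bit)": (1000, 2.7),
    "Radeon X1950XTX": (10_400, 64.0),
    "GeForce 7900 GTX": (15_600, 51.2),
}


def spread(values) -> float:
    """Ratio between the largest and smallest value."""
    values = list(values)
    return max(values) / min(values)


fill_spread = spread(fill for fill, _ in CARDS.values())
bandwidth_spread = spread(bw for _, bw in CARDS.values())
cpu_spread = 3000 / 120  # 3 GHz vs 120 MHz, for comparison

print(f"fill rate: {fill_spread:.1f}x, bandwidth: {bandwidth_spread:.1f}x, CPU analogy: {cpu_spread:.0f}x")
```

With these particular numbers the fill-rate gap is 15.6x and the bandwidth gap is about 23.7x, a little under the 25x of the CPU comparison.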

In other words, the high rate of technology improvement in graphics cards has left us with a ridiculously large gap in card performance. (In fact the real gap is a lot worse – the 9200 and 5200FX are not even close to the slowest cards we support, and I haven’t even mentioned the new GeForce 8 series, or pairings of two cards via SLI or Crossfire.)

* Pixel or texel? Technically a texel is a pixel on a texture, at least by some definitions, but perhaps more important is that these numbers indicate fill rate at 1x full-screen anti-aliasing…you would expect them to fill screen pixels at roughly 1/16th the speed at 16x FSAA. This is actually good – it means that FSAA can help “absorb” some of the difference in graphics power, making X-Plane more scalable.

If I could have a nickel for every time a user has asked me “what machine do I have to buy so that I can max out all of the settings in X-Plane”…

The problem is simple and two-fold:

- X-Plane’s maximum rendering settings can sink even the biggest computers. Why is X-Plane’s maximum so “overbuilt”?

- The rendering settings are not good predictors of performance. Why the hell not?

The answers are, roughly in that order, “Because we can’t predict the future” and “because it has to be that way”. Here’s the situation…

The Maximum Limits

Some of X-Plane’s maximum settings are design limitations. For example, your anti-aliasing can’t exceed your card’s capabilities, and the visibility can’t exceed the loaded area of scenery.

Other limits are based on algorithmic limitations – there are as many objects in New York City as we could possibly pack in. We figure you can always turn down object density if there are too many.

Still other limits are based on guesses about hardware. We could have made the mesh more or less complex – we chose a mesh complexity for the global scenery that we thought would be a reasonable compromise in frame-rate and quality for the v8 run based on typical hardware. This is just a judgement call.

So…how big of a computer do you need to run X-Plane with maximum objects? I have no idea! We honestly didn’t give it any consideration. Our strategy was:

- Make sure the maximum object density the renderer would produce would not exceed our disk space/DVD allocations. It didn’t…because objects are only in cities, they contribute only a tiny fraction to the size of global scenery.

- Make sure that we could turn object density up and down easily.

- Ship it!

We figure you’ll turn objects up and down until you get a compromise of frame-rate and visual quality that you can live with. If you can’t find a compromise, it’s time to buy a bigger computer, because we can’t give you something for nothing. All those objects require hardware to draw.

And I would go a step further and say it’s a good thing that the maximum density of objects is very high. When Randy (our sales/support/marketing guy) got a new PC, a very nice one, but not even the most ridiculous gaming box possible (and this was months ago) he reported that on the default settings he can fly the Grand Canyon at…283 fps!

My point is that the hardware keeps getting faster and we can’t predict where it will go. So it seems like a safe bet to leave the maximum settings very high for future users.

So How Insane is Insane

The other problem is that rendering settings don’t correspond well to machine power. I can’t tell you that a 3 GHz CPU can handle “tons” of objects. The problem is that hardware performance is a composite of a number of subsystems, and scenery complexity varies by region and even by view angle.

Even the slightest change in camera angle can have a pretty big effect on performance. Combine that with different types of scenery elements (objects in some, beaches in others, mountains in others) in different regions and it becomes very difficult to predict how well scenery will work on a given computer.

A Good Value

Given all this, we don’t try to come up with correlations between the settings and the hardware. Instead we simply try to make X-Plane run as efficiently as we can, and try to verify that you get a “good value” – that is, a lot of graphic quality relative to the power of your computer. We have to trust that you’ll buy as much computer as you want and/or can afford, and we try to give you the best look we can for it.

So if you are shopping for a new computer, you can go for the “Rolls Royce” strategy, where cash is no object and you maximize every component (even if paying a huge premium for brand new technology) or you can go for a “good value” strategy, trying to find the best price-per-performance points.

I’ll close this off with a few tips on buying:

- If you are buying a new computer, be sure you have a PCIe 16x slot! Any decent motherboard should come with this – if it doesn’t, you’re not getting a good computer. A PCIe 16x slot for the graphics card shouldn’t raise prices too much.

- Get 1 GB of memory minimum – X-Plane will run with less, but it helps, and memory isn’t that expensive these days. A new computer will be fast enough that the system can use the extra memory. I get my memory from Crucial – it’s good quality and often cheaper than what you pay if you let the computer manufacturer upgrade you.

- In the US for Windows/Linux, look for a graphics card between $100-$200 – that’s the best value range for graphics cards. Get an ATI or nVidia card (nVidia if you’re using Linux). I don’t buy my cards from PriceWatch but I find it a useful listing of the price point of a lot of graphics cards.

- Don’t get a video card with HyperMemory or TurboCache.

- Check the “suffix” of your graphics card on Wikipedia – there are tables for the clock speeds for both ATI and nVidia cards. An nVidia “GT” card will cost you more than an “LE” card, but will be clocked a lot faster and give you better performance.